Genie is out of the bottle

by Sriram Krishnan

In a prior tech blog post, we discussed the architecture of our petabyte-scale data warehouse in the cloud. Salient features of that architecture include the use of Amazon’s Simple Storage Service (S3) as our “source of truth”, the elasticity of the cloud to run multiple dynamically resizable Hadoop clusters that support various workloads, and our horizontally scalable Hadoop Platform as a Service called Genie.

Today, we are pleased to announce that Genie is now open source, and available to the public from the Netflix OSS GitHub site.

What is Genie?

Genie provides job and resource management for the Hadoop ecosystem in the cloud. From the perspective of the end-user, Genie abstracts away the physical details of various (potentially transient) Hadoop resources in the cloud, and provides a REST-ful Execution Service to submit and monitor Hadoop, Hive and Pig jobs without having to install any Hadoop clients. And from the perspective of a Hadoop administrator, Genie provides a set of Configuration Services, which serve as a registry for clusters, and their associated Hive and Pig configurations.

Why did we build Genie?

There are two main reasons why we built Genie. Firstly, we run multiple Hadoop clusters in the cloud to support different workloads at Netflix. Some of them are launched as needed, and are hence transient - for instance, we spin up “bonus” Hadoop clusters nightly to augment our resources for ETL (extract, transform, load) processing. Others are longer running (viz. our regular “SLA” and “ad-hoc” clusters) - but may still be re-spun from time to time, since we work under the operating assumption that cloud resources may go down at any time. Users need to discover the latest incarnations of these clusters by name, or by the type of workloads that they support. In the data center, this is generally not an issue since Hadoop clusters don’t come up or go down frequently, but this is much more common in the cloud.

Secondly, end-users simply want to run their Hadoop, Hive or Pig jobs - very few of them are actually interested in launching their own clusters, or even installing all the client-side software and downloading all the configurations needed to run such jobs. This is generally true in both the data center and the cloud. A REST-ful API to run jobs opens up a wealth of opportunities, which we have exploited by building web UIs, workflow templates, and visualization tools that encapsulate all our common patterns of use.

What Genie Isn’t

Genie is not a workflow scheduler, such as Oozie. Genie’s unit of execution is a single Hadoop, Hive or Pig job. Genie doesn’t schedule or run workflows - in fact, we use an enterprise scheduler (UC4) at Netflix to run our ETL.

Genie is also not a task scheduler, such as the Hadoop fair-share or capacity schedulers. We think of Genie as a resource match-maker, since it matches a job to an appropriate cluster based on the job parameters and cluster properties. If multiple clusters are candidates to run a job, Genie currently chooses one of them at random. It is possible to plug in a custom load balancer to choose a cluster more optimally - however, no such load balancer is currently available.
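To make the match-making idea concrete, here is a minimal sketch in Python. It is not Genie's code: the `Cluster` fields, the function names, and the `chooser` hook are all assumptions made for illustration. It filters clusters by the properties a job asks for and then picks one candidate at random, with the chooser standing in for a pluggable load balancer.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    # Field names are illustrative, not Genie's actual data model.
    cluster_id: str
    name: str
    schedules: frozenset   # workloads supported, e.g. {"SLA"} or {"adhoc"}
    metastores: frozenset  # metastore configurations supported, e.g. {"prod"}


def candidate_clusters(clusters, schedule, metastore):
    """Return every cluster whose properties satisfy the job's requirements."""
    return [c for c in clusters
            if schedule in c.schedules and metastore in c.metastores]


def pick_cluster(clusters, schedule, metastore, chooser=random.choice):
    """Match a job to a cluster; `chooser` stands in for a pluggable load balancer."""
    candidates = candidate_clusters(clusters, schedule, metastore)
    if not candidates:
        raise LookupError(f"no cluster supports {schedule!r} jobs on {metastore!r}")
    return chooser(candidates)


clusters = [
    Cluster("c1", "sla-main", frozenset({"SLA"}), frozenset({"prod"})),
    Cluster("c2", "adhoc-a", frozenset({"adhoc"}), frozenset({"prod", "test"})),
    Cluster("c3", "adhoc-b", frozenset({"adhoc"}), frozenset({"prod"})),
]
```

With this data, `pick_cluster(clusters, "adhoc", "prod")` returns either of the two ad-hoc clusters, while passing a different `chooser` (for example, one that prefers the least loaded cluster) would replace the random choice without touching the matching logic.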

Finally, Genie is not an end-to-end resource management tool - it doesn’t provision or launch clusters, and neither does it scale clusters up and down based on their utilization. However, Genie is a key complementary tool, serving as a repository of clusters, and an API for job management.

How Genie Works

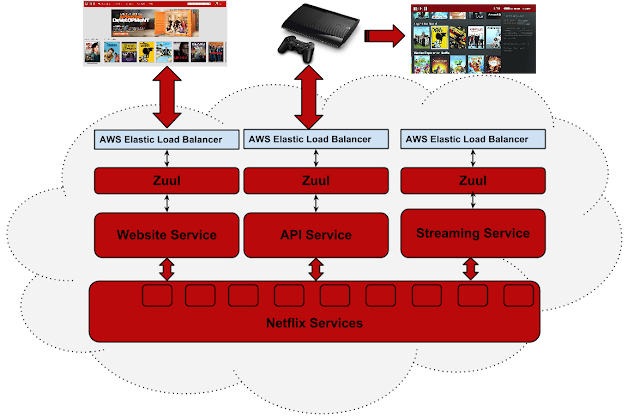

The following diagram explains the core components of Genie, and its two classes of Hadoop users - administrators, and end-users.

Genie itself is built on top of the following Netflix OSS components:

- Karyon, which provides bootstrapping, runtime insights, diagnostics, and various cloud-ready hooks,

- Eureka, which provides service registration and discovery,

- Archaius, for dynamic property management in the cloud,

- Ribbon, which provides Eureka integration, and client-side load-balancing for REST-ful interprocess communication, and

- Servo, which enables exporting metrics, registering them with JMX (Java Management Extensions), and publishing them to external monitoring systems such as Amazon's CloudWatch.

Genie can be cloned from GitHub, built, and deployed into a container such as Tomcat. But it is not of much use until someone (viz. an administrator) registers a Hadoop cluster with it. Registration of a cluster with Genie works as follows:

- Hadoop administrators first spin up a Hadoop cluster, e.g. using the EMR client API.

- They then upload the Hadoop and Hive configurations for this cluster (the *-site.xml files) to some location on S3.

- Next, the administrators use the Genie client to discover a Genie instance via Eureka, and make a REST-ful call to register a cluster configuration using a unique id, and a cluster name, along with a few other properties - e.g. that it supports “SLA” jobs, and the “prod” metastore. If they are creating a new metastore configuration, then they may also have to register a new Hive or Pig configuration with Genie.
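The registration steps above can be pictured with a toy in-memory registry. This is only a sketch of the idea of a configuration service: the class, its method names, the record layout, and the `s3://example-bucket/...` locations are hypothetical and do not reflect Genie's REST API or client.

```python
class ClusterRegistry:
    """Toy in-memory stand-in for a configuration service (not Genie's API)."""

    def __init__(self):
        self._by_id = {}

    def register(self, cluster_id, name, config_uris, **properties):
        """Record a cluster under a unique id, with its config locations."""
        if cluster_id in self._by_id:
            raise ValueError(f"cluster id {cluster_id!r} is already registered")
        record = {
            "id": cluster_id,
            "name": name,
            "config_uris": list(config_uris),  # where the *-site.xml files live
            "properties": dict(properties),    # e.g. schedule and metastore
        }
        self._by_id[cluster_id] = record
        return record

    def find_by_name(self, name):
        """Let users discover the current incarnation(s) of a named cluster."""
        return [c for c in self._by_id.values() if c["name"] == name]

    def deregister(self, cluster_id):
        """Drop a cluster, e.g. after a transient cluster is terminated."""
        return self._by_id.pop(cluster_id)


registry = ClusterRegistry()
registry.register(
    "cluster-001",                                    # hypothetical unique id
    "sla-cluster",                                    # hypothetical cluster name
    ["s3://example-bucket/conf/core-site.xml",        # hypothetical S3 locations
     "s3://example-bucket/conf/hive-site.xml"],
    schedule="SLA",
    metastore="prod",
)
```

Keeping the name separate from the unique id is what lets a re-spun cluster reuse a well-known name while each incarnation remains individually addressable.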

After a cluster has been registered, Genie is now ready to grant any wish to its end-users - as long as it is to submit Hadoop jobs, Hive jobs, or Pig jobs!

End-users use the Genie client to launch and monitor Hadoop jobs. The client internally uses Eureka to discover a live Genie instance, and Ribbon to perform client-side load balancing, and to communicate REST-fully with the service. Users specify job parameters, which consist of:

- A job type, viz. Hadoop, Hive or Pig,

- Command-line arguments for the job,

- A set of file dependencies on S3 that can include scripts or UDFs (user defined functions).

Users must also tell Genie what kind of Hadoop cluster to pick. For this, they have a few choices - they can use a cluster name or a cluster ID to pin to a specific cluster, or they can use a schedule (e.g. SLA) and a metastore configuration (e.g. prod), which Genie will use to pick an appropriate cluster to run a job on.
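As a rough illustration of the job parameters and cluster-selection choices described above, the following Python sketch models a job request and checks that it is complete. The class name, field names, and lower-case job type strings are assumptions made for this example, not Genie's actual request format.

```python
from dataclasses import dataclass, field
from typing import List, Optional

JOB_TYPES = {"hadoop", "hive", "pig"}


@dataclass
class JobRequest:
    # All field names are illustrative, not Genie's real schema.
    job_type: str
    cmd_args: str
    file_dependencies: List[str] = field(default_factory=list)
    cluster_name: Optional[str] = None   # pin to a cluster by name ...
    cluster_id: Optional[str] = None     # ... or by id
    schedule: Optional[str] = None       # or select by schedule, e.g. "SLA"
    metastore: Optional[str] = None      # and metastore configuration, e.g. "prod"

    def validate(self):
        """Check the job type and that the request says how to pick a cluster."""
        if self.job_type not in JOB_TYPES:
            raise ValueError(f"unsupported job type: {self.job_type!r}")
        pinned = bool(self.cluster_name or self.cluster_id)
        by_criteria = bool(self.schedule and self.metastore)
        if not (pinned or by_criteria):
            raise ValueError(
                "specify a cluster name or id, or both a schedule and a metastore")
        return self


request = JobRequest(
    job_type="hive",
    cmd_args="-f query.q",
    file_dependencies=["s3://example-bucket/scripts/query.q"],  # hypothetical path
    schedule="SLA",
    metastore="prod",
).validate()
```

A request that names neither a specific cluster nor a schedule and metastore pair is rejected up front, since there would be nothing to match against.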

Genie creates a new working directory for each job, stages all the dependencies (including Hadoop, Hive and Pig configurations for the chosen cluster), and then forks off a Hadoop client process from that working directory. It then returns a Genie job ID, which can be used by the clients to query for status, and also to get an output URI, which is browsable during and after job execution (see below). Users can monitor the standard output and error of the Hadoop clients, and also look at Hive and Pig client logs, if anything went wrong.

The Genie execution model is very simple - as mentioned earlier, Genie simply forks off a new process for each job from a new working directory. Other than simplicity, important benefits of this approach include isolation of jobs from each other and from Genie, and easy accessibility of standard output, error and job logs for our end-users (since they are browsable from the output URIs). We made a decision not to queue up jobs in Genie - if we had implemented a job queue, we would have had to implement a fair-share or capacity scheduler for Genie as well, which is already available at the Hadoop level. The downside of this approach is that a JVM is spawned for each job, which implies that Genie can only run a finite number of concurrent jobs on an instance, based on available memory.
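The fork-per-job model can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than Genie's implementation: `launch_job` is a hypothetical helper, dependencies are passed in as in-memory strings instead of being downloaded from S3, and a small Python child process stands in for the Hadoop, Hive or Pig client.

```python
import subprocess
import sys
import tempfile
import uuid
from pathlib import Path


def launch_job(command, dependencies, base_dir):
    """Run `command` in a fresh working directory; return (job_id, workdir, process)."""
    job_id = str(uuid.uuid4())
    workdir = Path(base_dir) / job_id
    workdir.mkdir(parents=True)

    # Stage dependencies. A real service would download these from S3;
    # here `dependencies` maps file names to their contents.
    for name, content in dependencies.items():
        (workdir / name).write_text(content)

    # Send the client's output to files in the working directory so that it
    # can be inspected while the job is running and after it finishes.
    with open(workdir / "stdout.log", "w") as out, \
            open(workdir / "stderr.log", "w") as err:
        process = subprocess.Popen(command, cwd=workdir, stdout=out, stderr=err)
    return job_id, workdir, process


base = tempfile.mkdtemp()
job_id, workdir, proc = launch_job(
    # Stand-in for a Hadoop client: a child process that prints the staged script.
    [sys.executable, "-c", "print(open('query.q').read())"],
    {"query.q": "show tables;"},
    base,
)
exit_code = proc.wait()
```

Because each job is a separate process with its own directory, a failing job cannot corrupt another job's files, and its logs stay available under `workdir`. The cost is the one noted above: every job consumes a process on the instance, so concurrency is bounded by available memory.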

Deployment at Netflix

Genie scales horizontally using ASGs (Auto-Scaling Groups) in the cloud, which helps us run several hundreds of concurrent Hadoop jobs in production at Netflix, with the help of Asgard for cloud management and deployment. We use Asgard (see screenshot below) to pick minimum, desired and maximum instances (for horizontal scalability) in multiple availability zones (for fault tolerance). For Genie server pushes, Asgard provides the concept of a “sequential ASG”, which lets us route traffic to new instances of Genie once a new ASG is launched, and turn off traffic to old instances by marking the old ASG out of service.

Using Asgard, we can also set up scaling policies to handle variable loads. The screenshot below shows a sample policy, which increases the number of Genie instances (by one) if the average number of running jobs per instance is greater than or equal to 25.
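The sample policy reduces to a small calculation, sketched below in Python. The function and parameter names are hypothetical, and this is not how Asgard or AWS Auto Scaling is configured. It only restates the rule: add one instance when the average number of running jobs per instance reaches 25, while staying within the configured minimum and maximum. Scale-down is not described here, so it is not modeled.

```python
def desired_instances(current, running_jobs, min_size, max_size,
                      threshold=25, step=1):
    """Return the instance count after applying the scale-up rule once."""
    if current <= 0:
        raise ValueError("current instance count must be positive")
    average = running_jobs / current  # average running jobs per instance
    target = current + step if average >= threshold else current
    # Never leave the [min_size, max_size] range chosen for the group.
    return max(min_size, min(max_size, target))
```

For example, four instances running 100 jobs in total average exactly 25 jobs each, so the rule asks for a fifth instance; at 99 jobs the count stays at four.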

Usage at Netflix

Genie is being used in production at Netflix to run several thousands of Hadoop jobs daily, processing hundreds of terabytes of data. The screenshot below (from our internal Hadoop investigative tool, code named “Sherlock”) shows some of our clusters over a period of a few months.

The blue line shows one of our SLA clusters, while the orange line shows our main ad-hoc cluster. The red line shows another ad-hoc cluster, with a new experimental version of a fair-share scheduler. Genie was used to route jobs to one of the two ad-hoc clusters at random, and we measured the impact of the new scheduler on the second ad-hoc cluster. When we were satisfied with the performance of the new scheduler, we spun up another larger consolidated ad-hoc cluster with the new scheduler (also shown by the orange line), and all new ad-hoc Genie jobs were now routed to this latest incarnation. The two older clusters were terminated once all running jobs were finished (we call this a “red-black” push).

Summary

Even though Genie is now open source, and has been running in production at Netflix for months, it is still a work in progress. We think of the initial release as version 0. The data model for the services is fairly generic, but definitely biased towards running at Netflix, and in the cloud. We hope for community feedback and contributions to broaden its applicability, and enhance its capabilities.

We will be presenting Genie at the 2013 Hadoop Summit during our session titled “Genie - Hadoop Platform as a Service at Netflix”, and demoing Genie and other tools that are part of the Netflix Hadoop toolkit at the Netflix Booth. Please join us for the presentation, and/or feel free to stop by the booth, chat with the team, and provide feedback.

If you are interested in working on great open source software in the areas of big data and cloud computing, please take a look at jobs.netflix.com for current openings!

References

Genie OSS

Genie Wiki: Getting Started

Netflix Open Source Projects

@NetflixOSS Twitter Feed
