NetflixOSS Open House

- introduction to the NetflixOSS platform

- 2013 roadmap and a sneak peek into what’s to come

Optimizing the Netflix API

About a year ago the Netflix API team began redesigning the API to improve performance and to enable UI engineering teams within Netflix to optimize client applications for specific devices. The philosophy behind the redesign was introduced in a previous post about embracing the differences between clients and devices.

This post is part one of a series on the architecture of our redesigned API.

Goals

We had multiple goals in creating this system, as follows:

Reduce Chattiness

One of the key drivers in pursuing the redesign in the first place was to reduce the chatty nature of our client/server communication, which could be hindering the overall performance of our device implementations.

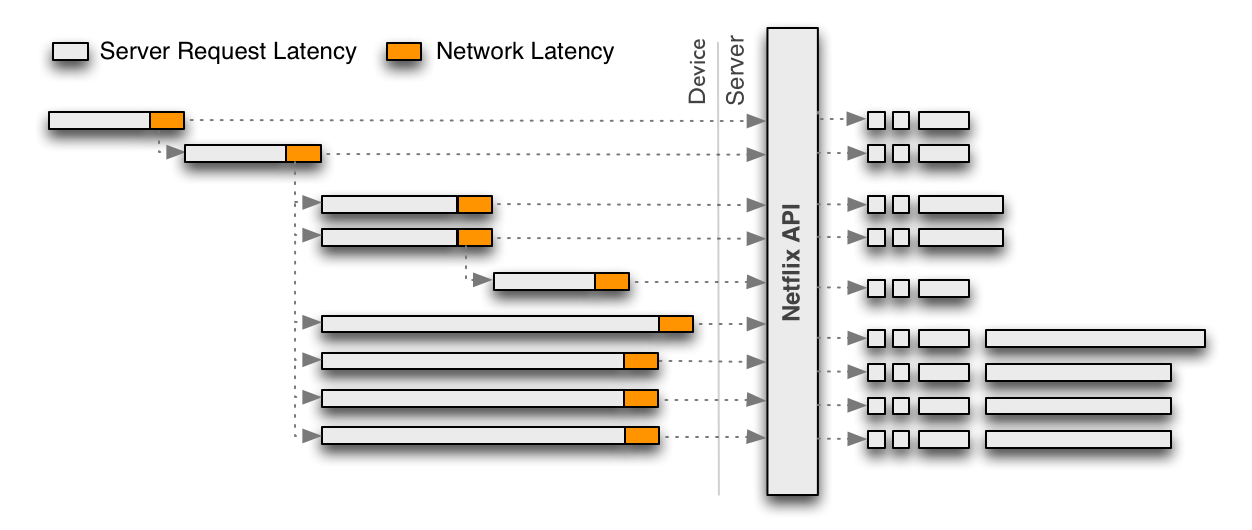

Due to the generic and granular nature of the original REST-based Netflix API, each call returns only a portion of functionality for a given user experience, requiring client applications to make multiple calls that need to be assembled in order to render a single user experience. This interaction model is illustrated in the following diagram:

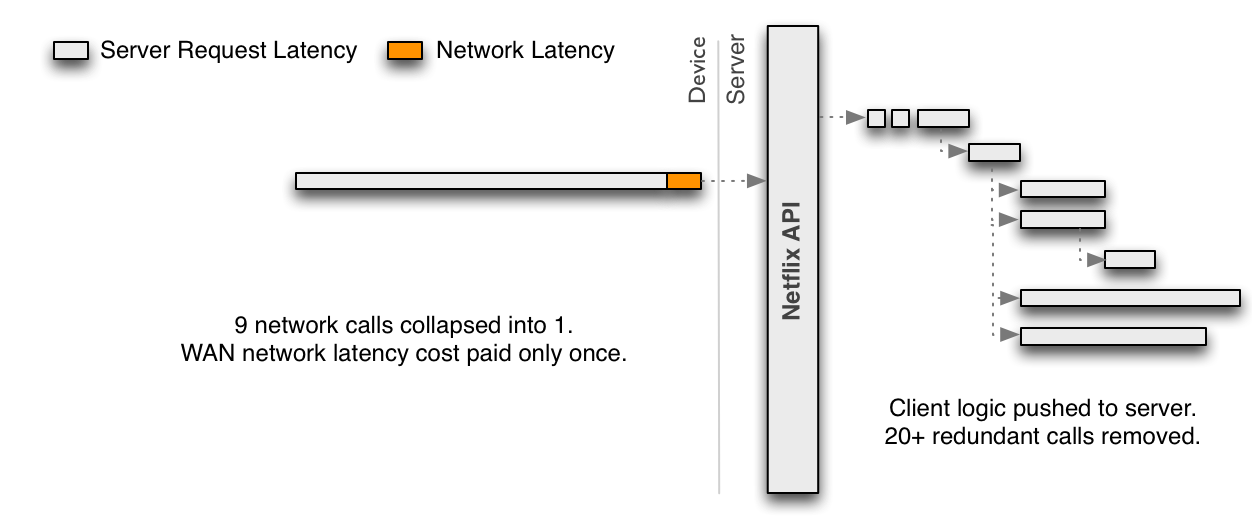

To reduce the chattiness inherent in the REST API, the discrete requests in the diagram above should be collapsed into a single request optimized for a given client. The benefit is that the device then pays the price of WAN latency once and leverages the low latency and more powerful hardware server-side. As a side effect, this also eliminates redundancies that occur for every incoming request.

A single optimized request such as this must embrace server-side parallelism to at least the same level as previously achieved through multiple network requests from the client. Because the server-side parallelized requests run within the same network, each one should perform better than if it were executed from the device. This must be achieved without requiring every engineer who implements an endpoint to become an expert in low-level threading, synchronization, thread safety, concurrent data structures, non-blocking IO and other such concerns.
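The argument can be made concrete with a back-of-the-envelope model. This is an assumption-laden sketch, not a Netflix measurement. A request is modeled as a sequence of dependent stages (for example, fetch the rows, then fetch the videos in each row), and the calls within a stage are independent. The WAN round trip, the LAN hop and the service times are made-up inputs, and `LatencyModel` is a hypothetical name.

```java
import java.util.Arrays;
import java.util.List;

/** Back-of-the-envelope latency model; every number fed into it is a hypothetical input. */
final class LatencyModel {
    /**
     * Chatty client: the device issues each stage's calls in parallel, but every stage
     * pays a full WAN round trip before the next dependent stage can start.
     */
    static long chattyDevice(List<long[]> stages, long wanRttMs) {
        long total = 0;
        for (long[] serviceTimesMs : stages) {
            total += wanRttMs + Arrays.stream(serviceTimesMs).max().orElse(0);
        }
        return total;
    }

    /**
     * Collapsed request: one WAN round trip in total. Server-side calls pay only a LAN hop and
     * run either in parallel (a stage costs its slowest call) or serially (a stage costs the sum).
     */
    static long collapsed(List<long[]> stages, long wanRttMs, long lanRttMs, boolean parallel) {
        long total = wanRttMs;
        for (long[] serviceTimesMs : stages) {
            total += parallel
                    ? lanRttMs + Arrays.stream(serviceTimesMs).max().orElse(0)
                    : Arrays.stream(serviceTimesMs).map(t -> lanRttMs + t).sum();
        }
        return total;
    }
}
```

Take one 20 ms stage followed by six independent 30 ms calls, with a 100 ms WAN round trip and a 1 ms LAN hop:

- The chatty device needs 250 ms.
- The parallel collapsed request needs 152 ms.
- A serial collapsed request needs 307 ms. It is slower than the chatty client even though it saves a WAN round trip.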

Distribute API Development

A single team should not become a bottleneck, nor need expertise in every client application, to create optimized endpoints. The system should enable rapid innovation through fast, decoupled development cycles across a wide variety of device types, with ownership and expertise distributed across teams. Each client application team should be capable of implementing and operating its own endpoints and the corresponding requests/responses.

Mitigate Deployment Risks

The Netflix API is a Java application running on hundreds of servers processing 2+ billion incoming requests a day for millions of customers around the world. The system must mitigate risks inherent in enabling rapid and frequent deployment by multiple teams with minimal coordination.

Support Multiple Languages

Engineers implementing endpoints come from a wide variety of backgrounds with expertise including JavaScript, Objective-C, Java, C, C#, Ruby, Python and others. The system should be able to support multiple languages at the same time.

Distribute Operations

Each client team will now manage the deployment lifecycle of their own web service endpoints. Operational tools for monitoring, debugging, testing, canarying and rolling out code must be exposed to a distributed set of teams so teams can operate independently.

Architecture

To achieve the goals above, our architecture distilled into a few key points:

- dynamic polyglot runtime

- fully asynchronous service layer

- functional reactive programming model

[1] Dynamic Endpoints

All new web service endpoints are now dynamically defined at runtime. New endpoints can be developed, tested, canaried and deployed by each client team without coordination (unless they depend on new functionality from the underlying API Service Layer shown at item 5, in which case they would need to wait until after those changes are deployed before pushing their endpoint).
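Here is a minimal sketch of the idea, assuming a hypothetical in-process registry. `EndpointRegistry` and its methods are illustrative names, not Netflix code. Handlers are looked up by path on every request, so publishing a new version takes effect immediately without redeploying the server. In the real system the handler would be built from endpoint code fetched from the repository described next; here it is just a Java function.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

/** Hypothetical router whose endpoints can be published, replaced or removed while the server runs. */
final class EndpointRegistry {
    // path -> handler that takes request parameters and returns a response body
    private final Map<String, Function<Map<String, String>, String>> handlers = new ConcurrentHashMap<>();

    /** Publishes a new endpoint, or atomically swaps in a new version of an existing one. */
    void publish(String path, Function<Map<String, String>, String> handler) {
        handlers.put(path, handler);
    }

    /** Takes an endpoint out of service (for example, rolling back a bad canary). */
    void unpublish(String path) {
        handlers.remove(path);
    }

    /** Dispatches to whatever version is currently published; empty if no endpoint exists. */
    Optional<String> handle(String path, Map<String, String> params) {
        Function<Map<String, String>, String> handler = handlers.get(path);
        return handler == null ? Optional.empty() : Optional.of(handler.apply(params));
    }
}
```

A `ConcurrentHashMap` lets request threads keep dispatching safely while a team publishes or rolls back an endpoint.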

[2] Endpoint Code Repository and Management

Endpoint code is published to a Cassandra multi-region cluster (globally replicated) via a RESTful Endpoint Management API used by client teams to manage their endpoints.

[3] Dynamic Polyglot JVM Language Runtime

Any JVM language can be supported so each team can use the language best suited to them.

The Groovy JVM language was chosen as our first supported language. The existence of first-class functions (closures), list/dictionary syntax, performance and debuggability were all aspects of our decision. Moreover, Groovy provides syntax comfortable to a wide range of developers, which helps to reduce the learning curve for the first language on the platform.

[4 & 5] Asynchronous Java API + Functional Reactive Programming Model

Embracing concurrency was a key requirement to achieve performance gains but abstracting away thread-safety and parallel execution implementation details from the client developers was equally important in reducing complexity and speeding up their rate of innovation. Making the Java API fully asynchronous was the first step as it allows the underlying method implementations to control whether something is executed concurrently or not without the client code changing. We chose a functional reactive approach to handling composition and conditional flows of asynchronous callbacks. Our implementation is modeled after Rx Observables.

[6] Hystrix Fault Tolerance

As we have described in a previous post, all service calls to backend systems are made via the Hystrix fault tolerance layer (which was recently open sourced, along with its dashboard) that isolates the dynamic endpoints and the API Service Layer from the inevitable failures that occur while executing billions of network calls each day from the API to backend systems.

The Hystrix layer is inherently multi-threaded due to its use of threads for isolating dependencies, and it is therefore leveraged for concurrent execution of blocking calls to backend systems. These asynchronous requests are then composed together via the functional reactive framework.
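The isolation idea can be sketched with plain `java.util.concurrent`. `IsolatedDependency` is a hypothetical name, and this is not Hystrix's API, which adds circuit breakers, metrics and more. The sketch works as follows:

- Each dependency gets its own small, bounded thread pool.
- Every call is bounded by a timeout.
- Rejection, timeout or failure all return a fallback value instead of propagating.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.function.Supplier;

/** Sketch of thread-pool isolation ("bulkheading") for one backend dependency; not Hystrix's API. */
final class IsolatedDependency implements AutoCloseable {
    private final ThreadPoolExecutor pool;
    private final long timeoutMs;

    IsolatedDependency(int threads, int queueSize, long timeoutMs) {
        // a small, bounded pool per dependency: a slow backend can exhaust only its own threads
        this.pool = new ThreadPoolExecutor(threads, threads, 0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(queueSize));
        this.timeoutMs = timeoutMs;
    }

    /** Runs a blocking call on this dependency's pool; any failure returns the fallback instead. */
    <T> T execute(Callable<T> blockingCall, Supplier<T> fallback) {
        Future<T> future;
        try {
            future = pool.submit(blockingCall);
        } catch (RejectedExecutionException e) {
            return fallback.get(); // pool and queue are full: shed load immediately
        }
        try {
            return future.get(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            future.cancel(true); // interrupt the stuck call so its thread is released
            return fallback.get();
        } catch (ExecutionException e) {
            return fallback.get(); // the call itself threw
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return fallback.get();
        }
    }

    @Override
    public void close() {
        pool.shutdownNow();
    }
}
```

Because each dependency owns its pool, a backend that hangs can tie up only its own threads. The calls it handles still run concurrently with work on every other dependency's pool.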

[7] Backend Services and Dependencies

The API Service Layer abstracts away all backend services and dependencies behind facades. As a result, endpoint code accesses “functionality” rather than a “system”. This allows us to change underlying implementations and architecture with little or no impact on the code that depends on the API. For example, a backend system might be split into 2 different services, 3 might be combined into one, or a remote network call might be optimized into an in-memory cache. None of these changes should affect endpoint code. The API Service Layer ensures this by keeping object models and other such tight couplings abstracted, never allowing them to “leak” into the endpoint code.
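Here is a hedged illustration of the facade idea, with hypothetical names throughout. Endpoint code sees only a `RatingService` interface. Replacing a remote call with an in-memory cache, one of the changes listed above, therefore requires no change to the endpoint.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.ToDoubleBiFunction;

/** The "functionality" endpoint code depends on (hypothetical); no backend types leak out. */
interface RatingService {
    double predictedRating(long userId, long videoId);
}

/** One implementation: a remote backend, modeled as a function standing in for a network client. */
final class RemoteRatingService implements RatingService {
    private final ToDoubleBiFunction<Long, Long> backend;

    RemoteRatingService(ToDoubleBiFunction<Long, Long> backend) {
        this.backend = backend;
    }

    @Override
    public double predictedRating(long userId, long videoId) {
        return backend.applyAsDouble(userId, videoId);
    }
}

/** Another: the same contract, served from an in-memory cache in front of the remote version. */
final class CachedRatingService implements RatingService {
    private final Map<String, Double> cache = new ConcurrentHashMap<>();
    private final RatingService delegate;

    CachedRatingService(RatingService delegate) {
        this.delegate = delegate;
    }

    @Override
    public double predictedRating(long userId, long videoId) {
        return cache.computeIfAbsent(userId + ":" + videoId, key -> delegate.predictedRating(userId, videoId));
    }
}

/** Endpoint code: identical no matter which implementation is wired in. */
final class RatingEndpoint {
    static String render(RatingService ratings, long userId, long videoId) {
        return "rating=" + ratings.predictedRating(userId, videoId);
    }
}
```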

Summary

The new Netflix API architecture is a significant departure from our previous generic RESTful API.

Dynamic JVM languages combined with an asynchronous Java API and the functional reactive programming model have proven to be a powerful combination to enable safe and efficient development of highly concurrent code.

The end result is a fault-tolerant, performant platform that puts control in the hands of those who know their target applications the best.

Following posts will provide further implementation and operational details about this new architecture.

If this type of work interests you we are always looking for talented engineers.

Reactive Programming at Netflix

Over the last year, Netflix has reinvented our client-server interaction model. One of the key building blocks of our platform is Microsoft's open-source Reactive Extensions library (Rx). Netflix is a big believer in the Rx model, because Rx has made it much easier for us to build complex asynchronous programs.

Asynchronous Programming is Hard

Events and AJAX requests are sequences of values that are pushed from the producer to the consumer asynchronously. The consumer reacts to the data as it comes in, which is why asynchronous programming is also called Reactive Programming. Every web application is a reactive program, because code reacts to events like mouse clicks, key presses, and the asynchronous arrival of data from the server.

Asynchronous programming is hard because logical units of code have to be split across many different callbacks so that they can be resumed after async operations complete. To make matters worse, most programming languages have no facilities for propagating asynchronous errors. Asynchronous errors aren't thrown up the stack of the code that started the operation, which means that try/catch blocks around that code are useless.

Events are Collections

The Reactive Extensions library models each event as a collection of data rather than a series of callbacks. This is a revolutionary idea, because once you model an event as a collection you can transform events in much the same way you might transform in-memory collections. Rx provides developers with a SQL-like query language that can be used to sequence, filter, and transform events. Rx also makes it possible to propagate and handle asynchronous errors in a manner similar to synchronous error handling.

Rx is currently available for JavaScript and Microsoft's .NET platform, and we're using both flavors to power our PS3 and Windows 8 clients respectively. As Ben Christensen mentioned in his post "Optimizing the Netflix API", we've also ported Rx to the Java platform so that we can use it on the server. Today, Reactive Extensions is required learning for many developers at Netflix. We've developed an online, interactive tutorial for teaching our developers Rx, and we're opening it up to the public today.

Reactive Extensions at Netflix

On a very hot day in Brisbane, I gave an interview to Channel 9 during which I discussed Rx use at Netflix in depth. I also discussed Falkor, a new protocol we've designed for client-server communication at Netflix. Falkor provides developers with a unified model for interacting with both local and remote data, and it's built on top of Rx.

In the coming weeks we'll be blogging more about Reactive Programming, our next generation data platform, and the Falkor protocol. Today Netflix is one of very few technology companies using reactive programming on both the server and the client. If you think this is as exciting as we do, join the team!

NetflixGraph Metadata Library: An Optimization Case Study

Here at Netflix, we serve more than 30 million subscribers across over 40 countries. These users collectively generate billions of requests per day, most of which require metadata about our videos. Each call we receive can potentially sift through thousands of video metadata attributes. To minimize the latency with which this data can be accessed, we generally store most of it directly in RAM on the servers responsible for servicing live traffic.

We have two main applications that package and deliver this data to the servers that enable all of our user experiences, from playing movies on the thousands of devices we support to simply browsing our selection on a cell phone:

- VMS, our Video Metadata Platform, and

- NetflixGraph, which contains data representable as a directed graph

This article specifically details how we achieved a 90% reduction in the memory footprint of NetflixGraph. The results of this work will be open-sourced in the coming months.

Optimization

We constantly need to be aware of the memory footprints on our servers at Netflix. NetflixGraph presented a great opportunity for experimentation with reduction of memory footprints. The lessons and techniques we learned from this exercise have had a positive impact on other applications within Netflix and, we hope, can be applied outside of Netflix as well.

Investigation

The first step in the optimization of any specific resource is to become familiar with the biggest consumers of that resource. After all, it wouldn't make much sense to shrink a data structure that consumes a negligible amount of memory; it wouldn’t be an optimal use of engineering time.

We started by creating a small test application which loaded only sample NetflixGraph data, then we took a heap dump of that running process. A histogram from this dump (shown below in Eclipse Memory Analyzer) shows us the types of objects which are the largest memory consumers:

From this histogram, we can clearly see that HashMapEntry objects and arrays of HashMapEntry objects are our largest consumers by far. In fact, these structural elements themselves consumed about 83% of our total memory footprint. Upon inspection of the code, the reason for this finding was not surprising. The relationships between objects in our directed graph were generally represented with HashMaps, where HashSets of “to” objects were keyed by “from” objects. For example, the set of genres to which a video belongs would have been represented with a HashMap<Video, HashSet<Genre>>. In this map, the Video object representing “Captain America” might have been the key for a Set containing the Genres “Action”, “Adventure”, “Comic Books & Superheroes”, and maybe, in typical Netflix fashion, the very specific “Action & Adventure Comic Book Movies Depicting Scenes from World War II”.

Solution: Compact Encoded Data Representation

We knew that we could hold the same data in a more memory-efficient way. We created a library to represent directed-graph data, which we could then overlay with the specific schema we needed.

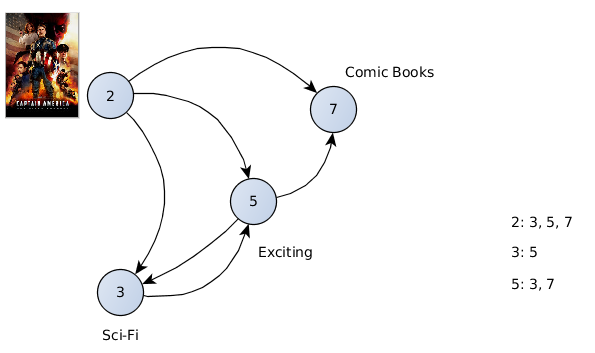

We start by numbering each of our unique objects from 1 to n, and we refer to the value assigned to each object as its "ordinal". Once each object is numbered, we need a data structure that maintains the relationships between ordinals. Let’s take an example: the figure below represents a few nodes in our graph which have been assigned ordinals and which are connected to each other by some property.

Internal to the graph data structure, we refer to each object only by its assigned ordinal. This way, we avoid using expensive 64-bit object references. Instead, the objects to which another object is connected can be represented by just a list of integers (ordinals). In the above diagram, we can see that the node which was assigned the ordinal “2” is connected to nodes 3, 5, and 7. These connections are of course fully representable by just the list of integers [ 3, 5, 7 ].
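Here is a minimal sketch of the numbering step. `OrdinalMap` is a hypothetical name; the post doesn't show NetflixGraph's own classes. The hash map is consulted only at the edges, to translate an object into its ordinal and back. The graph structure itself stores nothing but ints.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Assigns each distinct object a dense integer ordinal, numbered from 1 to n as in the text. */
final class OrdinalMap<T> {
    private final Map<T, Integer> ordinalByObject = new HashMap<>();
    private final List<T> objectByOrdinal = new ArrayList<>();

    /** Returns obj's ordinal, assigning the next free one the first time obj is seen. */
    int getOrAssign(T obj) {
        Integer existing = ordinalByObject.get(obj);
        if (existing != null) {
            return existing;
        }
        objectByOrdinal.add(obj);
        int ordinal = objectByOrdinal.size(); // 1-based
        ordinalByObject.put(obj, ordinal);
        return ordinal;
    }

    /** Maps an ordinal back to its object, e.g. to materialize the result of a graph query. */
    T get(int ordinal) {
        return objectByOrdinal.get(ordinal - 1);
    }
}
```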

Our data structure maintains two arrays. One is an integer array, and the other is a byte array. Each object's connections are encoded into the byte array as delta-encoded variable-byte integers (more on this in the next paragraph). The integer array contains offsets into the byte array, such that the connections for the object represented by some ordinal are encoded starting at the byte indicated by offsetArray[ordinal].

Variable-byte encoding is a way to represent integers in a variable number of bytes, whereby smaller values are represented in fewer bytes. An excellent explanation is available on Wikipedia. Because smaller values take fewer bytes, we benefit significantly if we can represent our connected ordinals with smaller values. If we sort the ordinals for some connection set in ascending order, we can represent each connection not by its actual value, but by the difference between its value and the previous value in the set. For example, the ordinals [1, 2, 3, 5, 7, 11, 13] would be represented by the values [1, 1, 1, 2, 2, 4, 2].
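Here is a sketch of delta plus variable-byte encoding. It assumes one common variant: 7 data bits per byte, most significant group first, with the high bit set on every byte except the last. The post doesn't specify which variant NetflixGraph uses, and `DeltaVarInt` is a hypothetical name.

```java
import java.io.ByteArrayOutputStream;
import java.util.stream.IntStream;

/** Delta + variable-byte encoding of a sorted set of ordinals (one common variant, assumed). */
final class DeltaVarInt {
    /** Writes a non-negative int using 7 data bits per byte; the high bit means "more bytes follow". */
    static void writeVInt(ByteArrayOutputStream out, int value) {
        if (value < 0) {
            throw new IllegalArgumentException("negative value: " + value);
        }
        for (int shift = 28; shift > 0; shift -= 7) {
            if ((value >>> shift) != 0) { // skip leading all-zero groups
                out.write(((value >>> shift) & 0x7F) | 0x80);
            }
        }
        out.write(value & 0x7F); // last group: high bit clear
    }

    /** Reads one value starting at pos[0] and advances pos[0] past it. */
    static int readVInt(byte[] data, int[] pos) {
        int value = 0;
        byte b;
        do {
            b = data[pos[0]++];
            value = (value << 7) | (b & 0x7F);
        } while ((b & 0x80) != 0);
        return value;
    }

    /** Encodes ascending ordinals as gaps from the previous ordinal (the first gap is from 0). */
    static byte[] encode(int[] sortedOrdinals) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        int previous = 0;
        for (int ordinal : sortedOrdinals) {
            writeVInt(out, ordinal - previous);
            previous = ordinal;
        }
        return out.toByteArray();
    }

    /** Decodes by summing the gaps back up into a running total. */
    static int[] decode(byte[] data) {
        IntStream.Builder ordinals = IntStream.builder();
        int[] pos = {0};
        int previous = 0;
        while (pos[0] < data.length) {
            previous += readVInt(data, pos);
            ordinals.add(previous);
        }
        return ordinals.build().toArray();
    }
}
```

Every gap in the example above is below 128, so the seven ordinals take exactly seven bytes. A gap of 300 would need two.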

Of course, there’s more to our data than just nodes and connections. Each node is typed (e.g. Video, Genre, Character), and each type has a different set of properties. For example, a video may belong to several genres, which is one type of connection. But it also may depict several characters, which is a different type of connection.

The character Captain America, above, is not the same node type as the movie Captain America: The First Avenger.

In order to represent these different types of nodes, and different properties for each node type, we define a schema. The schema tells us about each of our node types. For each node type, it also enumerates the properties for which a set of connections can be retrieved.

When all connections for a node are encoded, the connection grouping for each of its properties is appended to the byte array in the order in which the properties appear in the schema. Each group of integers representing these connections is preceded by a single integer indicating the total number of bytes used to encode that property. In our earlier example, since each of the values [1, 1, 1, 2, 2, 4, 2] is representable with a single byte, this grouping would be preceded by the encoded value “7”, indicating that seven bytes are used to represent the connections for this property. This allows us to read how many bytes a given property uses, then skip that many bytes if we are not interested in that property.

At runtime, when we need to find the set of connections over some property for a given object, we go through the following steps:

1. find the object's ordinal.

2. look up the pointer into our byte array for this object.

3. find the first property for the node type of this object in our schema.

4. while the current property is not the property we're interested in:

   4a. read how many bytes are used to represent this property.

   4b. increment our pointer by the value discovered in (4a).

   4c. move to the next property in the schema.

5. iteratively decode values from the byte array, each time adding the current value to the previous value.

6. look up the connected objects by the returned ordinals.
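The layout and the lookup steps above can be combined into one small sketch, subject to these assumptions:

- It is hypothetical; `CompactGraph` is not a NetflixGraph class.
- It covers a single node type.
- It uses 0-based ordinals so they can index the offset array directly.
- It encodes integers with one common variable-byte variant (7 data bits per byte, high bit meaning "more bytes follow").

```java
import java.io.ByteArrayOutputStream;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.stream.IntStream;

/**
 * Sketch of the compact layout for a single node type (hypothetical, not NetflixGraph's code).
 * offsets[ordinal] points into one shared byte array; each node's properties are written in schema
 * order, each as a byte-length prefix followed by delta-encoded variable-byte ordinals.
 */
final class CompactGraph {
    private final List<String> schema; // property names, in schema order
    private final int[] offsets;
    private final byte[] data;

    /** connections.get(ordinal) maps a property name to that node's connected ordinals (0-based). */
    CompactGraph(List<String> schema, List<Map<String, int[]>> connections) {
        this.schema = schema;
        this.offsets = new int[connections.size()];
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        for (int ordinal = 0; ordinal < connections.size(); ordinal++) {
            offsets[ordinal] = out.size();
            for (String property : schema) {
                int[] targets = connections.get(ordinal).getOrDefault(property, new int[0]).clone();
                Arrays.sort(targets); // sorted input keeps every gap small and non-negative
                ByteArrayOutputStream group = new ByteArrayOutputStream();
                int previous = 0;
                for (int target : targets) {
                    writeVInt(group, target - previous);
                    previous = target;
                }
                writeVInt(out, group.size()); // length prefix, so readers can skip this property
                out.write(group.toByteArray(), 0, group.size());
            }
        }
        this.data = out.toByteArray();
    }

    /** Finds the node's offset, skips unwanted properties, then sums up the gaps. */
    int[] connections(int ordinal, String property) {
        int[] pos = {offsets[ordinal]};
        for (String current : schema) {
            int length = readVInt(data, pos); // (4a) bytes used by this property
            if (current.equals(property)) {
                int end = pos[0] + length;
                IntStream.Builder result = IntStream.builder();
                int previous = 0;
                while (pos[0] < end) {
                    previous += readVInt(data, pos);
                    result.add(previous);
                }
                return result.build().toArray();
            }
            pos[0] += length; // (4b) skip over this property
        }
        throw new IllegalArgumentException("property not in schema: " + property);
    }

    private static void writeVInt(ByteArrayOutputStream out, int value) {
        for (int shift = 28; shift > 0; shift -= 7) {
            if ((value >>> shift) != 0) {
                out.write(((value >>> shift) & 0x7F) | 0x80);
            }
        }
        out.write(value & 0x7F);
    }

    private static int readVInt(byte[] bytes, int[] pos) {
        int value = 0;
        byte b;
        do {
            b = bytes[pos[0]++];
            value = (value << 7) | (b & 0x7F);
        } while ((b & 0x80) != 0);
        return value;
    }
}
```

In this layout, a membership test such as "is this video in genre 5?" means decoding gaps until the running total reaches or passes 5. That is the O(n) cost weighed against the memory savings in the next sections.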

Results

When we dropped this new data structure in the existing NetflixGraph library, our memory footprint was reduced by 90%. A histogram of our test application from above, loading the exact same set of data, now looks like the following:

When to consider this solution

There is a potential disadvantage to this approach. In addition to memory footprint on our edge servers, another thing we constantly need to be cognizant of is CPU utilization. When we represented connections as HashSets, determining whether an object is connected to another object was an O(1) operation. To ask this question in the new way, our data structure requires an iteration over all values in the set, which is an O(n) operation.

Luckily, the vast majority of our access patterns for this data are full iterations over the sets, which are no slower now than they were in the previous implementation. In addition, the engineers for each of the teams responsible for maintaining our edge services are extremely vigilant, and our resource utilization is heavily scrutinized with sophisticated tools on each new deployment.

Conclusion

This article has discussed one of the approaches we took for compacting directed graph data in the context of one of our more recognizable data sets – deeply personalized genres. Any application with data sets which lend themselves to representation as directed graphs can benefit from this specific optimization. We will be open-sourcing the memory optimized graph component of this library in the coming months. Stay tuned!

By the way, if you’re interested in working with the amazing group of engineers who solve the scalability problems Netflix faces on a daily basis, we are looking for both a software engineer and an automation engineer on the Product Infrastructure team. At Netflix, you’ll be working with some of the most talented engineering teammates in the world. Visit http://jobs.netflix.com to get started!

Announcing Ribbon: Tying the Netflix Mid-Tier Services Together

by Allen Wang and Sudhir Tonse

Netflix embraces a fine-grained Service Oriented Architecture as the underpinning of its Cloud-based deployment model. Currently, we run hundreds of such fine-grained services that are collectively responsible for handling customer-facing requests via a few "Edge Services" such as the Netflix API Service. A lightweight REST-based protocol is the choice for inter-process communication among these services.

The Netflix Internal Web Service Framework (aka NIWS) forms the bedrock of this architecture in the realm of communication. Together with the previously announced Eureka, which aids in service discovery, NIWS provides all the components pertinent to making REST calls.

NIWS comprises a REST client and server framework based on JSR-311, a RESTful API specification for Java. Our services use various payload data serialization formats such as Avro, XML, JSON, Thrift and Google Protocol Buffers, and NIWS provides the mechanism for serializing and deserializing the data.

Today, we are happy to announce Ribbon as the latest offering of our hugely popular and growing Open Source libraries hosted on GitHub.

Ribbon, as a first cut, mainly offers the client-side software load balancing algorithms that have been battle tested at Netflix, along with a few of the other components that form our Inter Process Communication stack (aka NIWS). We plan to continue open sourcing the rest of the NIWS stack in the coming months. Please note that the load balancers mentioned here are the internal client-side load balancers, used alongside Eureka, that primarily balance requests to our mid-tier services. For our public-facing Edge Services we continue to use Amazon's ELB Service.

Deployment Topology

Diagram: Typical (representative) deployment architecture at Netflix.

A typical deployment architecture at Netflix is a multi-region, multi-zone deployment which aids in better availability and resiliency.

The Amazon ELB provides load balancing for customer/device facing requests while internal mid-tier requests are handled via the Ribbon framework.

Eureka provides the service registry for all Netflix services. Ribbon clients are typically created and configured for each of the target services. Ribbon's Client component offers a good set of configuration options such as connection timeouts, retries and the retry algorithm (exponential, bounded backoff).
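Ribbon exposes retries and backoff as configuration, but the post doesn't list the property names. The sketch below therefore only illustrates what an "exponential, bounded backoff" retry policy computes: the delay doubles after each failed attempt but never exceeds a cap. `BackoffRetry` is a hypothetical helper, not Ribbon's configuration API.

```java
import java.util.concurrent.Callable;

/** Hypothetical retry helper with bounded exponential backoff (not Ribbon's configuration API). */
final class BackoffRetry {
    private final int maxAttempts;
    private final long initialDelayMs;
    private final long maxDelayMs;

    BackoffRetry(int maxAttempts, long initialDelayMs, long maxDelayMs) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be >= 1");
        }
        this.maxAttempts = maxAttempts;
        this.initialDelayMs = initialDelayMs;
        this.maxDelayMs = maxDelayMs;
    }

    /** Delay before the n-th retry (1-based): initialDelayMs * 2^(n-1), capped at maxDelayMs. */
    long delayBeforeRetry(int retry) {
        long delay = initialDelayMs;
        for (int i = 1; i < retry && delay < maxDelayMs; i++) {
            delay *= 2;
        }
        return Math.min(delay, maxDelayMs);
    }

    /** Calls the request up to maxAttempts times, sleeping between failed attempts. */
    <T> T call(Callable<T> request) throws Exception {
        Exception last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return request.call();
            } catch (Exception e) {
                last = e;
                if (attempt < maxAttempts) {
                    Thread.sleep(delayBeforeRetry(attempt));
                }
            }
        }
        throw last; // every attempt failed: surface the most recent error
    }
}
```

With a 10 ms initial delay and a 50 ms cap, the retry delays are 10, 20, 40, 50, 50, ... ms.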

Ribbon comes built in with a pluggable and customizable LoadBalancing component. Some of the load balancing strategies offered are listed below, with more to follow.

- Simple Round Robin LB

- Weighted Response Time LB

- Zone Aware Round Robin LB

- Random LB
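Each strategy is a small, swappable piece of logic. The sketch below shows three of them behind one hypothetical `ServerChooser` interface. The class names are illustrative, not Ribbon's. The response-time weighting (probability proportional to 1 / average latency) is also an assumption, since the post doesn't say how Ribbon derives its weights.

```java
import java.util.List;
import java.util.Map;
import java.util.Random;
import java.util.concurrent.atomic.AtomicInteger;

/** A pluggable strategy for picking one server from a candidate list (hypothetical interface). */
interface ServerChooser {
    String choose(List<String> servers);
}

/** Cycles through the servers in order. */
final class RoundRobinChooser implements ServerChooser {
    private final AtomicInteger next = new AtomicInteger();

    @Override
    public String choose(List<String> servers) {
        // floorMod keeps the index valid even after the counter wraps past Integer.MAX_VALUE
        return servers.get(Math.floorMod(next.getAndIncrement(), servers.size()));
    }
}

/** Picks uniformly at random. */
final class RandomChooser implements ServerChooser {
    private final Random random;

    RandomChooser(Random random) {
        this.random = random;
    }

    @Override
    public String choose(List<String> servers) {
        return servers.get(random.nextInt(servers.size()));
    }
}

/** Favors fast servers: probability proportional to 1 / average response time (an assumed weighting). */
final class ResponseTimeWeightedChooser implements ServerChooser {
    private final Map<String, Double> avgResponseMs;
    private final Random random;

    ResponseTimeWeightedChooser(Map<String, Double> avgResponseMs, Random random) {
        this.avgResponseMs = avgResponseMs;
        this.random = random;
    }

    @Override
    public String choose(List<String> servers) {
        double[] cumulative = new double[servers.size()];
        double total = 0;
        for (int i = 0; i < servers.size(); i++) {
            total += 1.0 / avgResponseMs.get(servers.get(i));
            cumulative[i] = total;
        }
        double r = random.nextDouble() * total;
        for (int i = 0; i < cumulative.length; i++) {
            if (r < cumulative[i]) {
                return servers.get(i);
            }
        }
        return servers.get(servers.size() - 1); // reached only through floating-point rounding
    }
}
```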

Battle Tested Features

The main benefit of Ribbon is that it offers a simple inter-process communication mechanism with features built on our operational learnings and experience in the Amazon Cloud. Ribbon's Zone Aware Load Balancer, for example, is built with circuit-tripping logic and can be configured to favor the target service instance based on Zone Affinity (i.e. it favors the zone in which the calling service itself is hosted, thus benefiting from reduced latency and savings in cost). It monitors the operational behavior of the instances running in each zone and can quickly (in real time) drop an entire Zone out of rotation. This helps us be resilient in the face of Zone outages, as described in a prior blog post.

Diagram: Zone Aware Load Balancer

The picture above shows the Zone Aware LoadBalancer in action. The LoadBalancer will do the following when picking a server:

- The LoadBalancer will calculate and examine zone stats of all available zones. If the active requests per server for any zone have reached a configured threshold, this zone will be dropped from the active server list. If more than one zone has reached the threshold, the zone with the most active requests per server will be dropped.

- Once the worst zone is dropped, a zone will be chosen among the rest with probability proportional to its number of instances.

- A server will be returned from the chosen zone using a given Rule (a Rule is a load balancing strategy, for example a simple Round Robin Rule).
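The three steps can be sketched as follows, assuming a hypothetical `ServerStat` snapshot per server and a plain round-robin rule inside the chosen zone. The post leaves two details open, and the sketch fills them in, marking both as assumptions in the code:

- "Reached the threshold" is read as "at or above".
- The last remaining zone is never dropped.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;
import java.util.TreeMap;

/** A server as the balancer sees it (hypothetical model). */
final class ServerStat {
    final String id;
    final String zone;
    final int activeRequests;

    ServerStat(String id, String zone, int activeRequests) {
        this.id = id;
        this.zone = zone;
        this.activeRequests = activeRequests;
    }
}

/** Sketch of the three zone-aware steps; not thread-safe and not Ribbon's code. */
final class ZoneAwareChooser {
    private final double maxActiveRequestsPerServer; // the configured threshold
    private final Random random;
    private final Map<String, Integer> roundRobinByZone = new HashMap<>();

    ZoneAwareChooser(double maxActiveRequestsPerServer, Random random) {
        this.maxActiveRequestsPerServer = maxActiveRequestsPerServer;
        this.random = random;
    }

    String choose(List<ServerStat> servers) {
        if (servers.isEmpty()) {
            throw new IllegalArgumentException("no servers");
        }
        Map<String, List<ServerStat>> byZone = new TreeMap<>();
        for (ServerStat s : servers) {
            byZone.computeIfAbsent(s.zone, z -> new ArrayList<>()).add(s);
        }
        // Step 1: among zones over the threshold, drop the one with the most active requests per server
        String worstZone = null;
        double worstLoad = Double.NEGATIVE_INFINITY;
        for (Map.Entry<String, List<ServerStat>> zone : byZone.entrySet()) {
            double load = zone.getValue().stream().mapToInt(s -> s.activeRequests).average().orElse(0);
            // assumption: "reached" means at or above the threshold
            if (load >= maxActiveRequestsPerServer && load > worstLoad) {
                worstZone = zone.getKey();
                worstLoad = load;
            }
        }
        if (worstZone != null && byZone.size() > 1) { // assumption: never drop the last remaining zone
            byZone.remove(worstZone);
        }
        // Step 2: pick a zone with probability proportional to its number of instances
        int total = 0;
        for (List<ServerStat> zone : byZone.values()) {
            total += zone.size();
        }
        int r = random.nextInt(total);
        for (Map.Entry<String, List<ServerStat>> zone : byZone.entrySet()) {
            List<ServerStat> members = zone.getValue();
            if (r < members.size()) {
                // Step 3: apply a per-zone rule, here simple round robin
                int turn = roundRobinByZone.merge(zone.getKey(), 1, Integer::sum) - 1;
                return members.get(Math.floorMod(turn, members.size())).id;
            }
            r -= members.size();
        }
        throw new IllegalStateException("unreachable: r is always below the instance total");
    }
}
```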

Some of the features of Ribbon are listed below with more to follow.

- Easy integration with a Service Discovery component such as Netflix's Eureka

- Runtime configuration using Archaius

- Operational Metrics exposed via JMX and published via Servo

- Multiple and pluggable serialization choices (via JSR-311, Jersey)

- Asynchronous and Batch operations (coming up)

- Automatic SLA framework (coming up)

- Administration/Metrics console (coming up)

If you would like to contribute to our highly scalable libraries and frameworks for ephemeral distributed environments, please take a look at http://netflix.github.com. You can follow us on twitter at @NetflixOSS.

We will be hosting a NetflixOSS Open House on the 6th of February, 2013 (Limited seats. RSVP needed).

We are constantly looking for great talent to join us and we welcome you to take a look at our Jobs page or contact @stonse for positions in the Cloud Platform Infrastructure team.

Resources

- Netflix Open Source Dashboard

- Ribbon

- Eureka (Service Discovery and Metadata)

- Archaius (Dynamic configurations)

- Hystrix (Latency and Fault Tolerance)

- Simian Army

Functional Reactive in the Netflix API with RxJava

Our recent post on optimizing the Netflix API introduced how our web service endpoints are implemented using a "functional reactive programming" (FRP) model for composition of asynchronous callbacks from our service layer.

This post takes a closer look at how and why we use the FRP model and introduces our open source project RxJava – a Java implementation of Rx (Reactive Extensions).

Embrace Concurrency

Server-side concurrency is needed to effectively reduce network chattiness. Without concurrent execution on the server, a single "heavy" client request might not be much better than many "light" requests because each network request from a device naturally executes in parallel with other network requests. If the server-side execution of a collapsed "heavy" request does not achieve a similar level of parallel execution it may be slower than the multiple "light" requests even accounting for saved network latency.
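Here is a minimal sketch of server-side fan-out using only `java.util.concurrent`. `CollapsedRequest` is a hypothetical name. The Netflix API itself composes Observables rather than calling `invokeAll` directly, as the rest of this post describes. The point is only that independent backend calls inside one collapsed request should overlap rather than run back to back.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Future;

/** Hypothetical handler for one collapsed "heavy" request: independent backend calls run in parallel. */
final class CollapsedRequest {
    static Map<String, String> fetchAll(ExecutorService pool, Map<String, Callable<String>> calls)
            throws InterruptedException, ExecutionException {
        List<String> names = new ArrayList<>(calls.keySet());
        List<Callable<String>> tasks = new ArrayList<>();
        for (String name : names) {
            tasks.add(calls.get(name));
        }
        // invokeAll starts every task on the pool and returns once all have finished, so with
        // enough threads the wall-clock cost is roughly the slowest call rather than the sum
        List<Future<String>> results = pool.invokeAll(tasks);
        Map<String, String> response = new LinkedHashMap<>();
        for (int i = 0; i < names.size(); i++) {
            response.put(names.get(i), results.get(i).get()); // already complete; get() rethrows failures
        }
        return response;
    }
}
```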

Futures are Expensive to Compose

Futures are straightforward to use for a single level of asynchronous execution, but they start to add non-trivial complexity when they're nested.

Using Futures, conditional asynchronous execution flows become difficult to compose optimally, particularly as the latency of each request varies at runtime. It can be done, of course, but it quickly becomes complicated (and thus error prone) or prematurely blocks on 'Future.get()', eliminating the benefit of asynchronous execution.
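The problem is easiest to see with plain `java.util.concurrent.Future`. In this hypothetical sketch (`FutureComposition` and its methods are illustrative names), the second call depends on the first call's result. The only straightforward way to express that condition is to block the calling thread on `get()`, once per level of nesting. That is the premature blocking described above.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Future;

/** Hypothetical services where the second call depends on the first call's result. */
final class FutureComposition {
    static Future<String> fetchTier(ExecutorService pool, int userId) {
        return pool.submit(() -> userId % 2 == 0 ? "premium" : "basic");
    }

    static Future<String> fetchRow(ExecutorService pool, String tier) {
        return pool.submit(() -> tier + "-row");
    }

    /** With plain Futures, branching on a result means blocking for it. */
    static String homeRow(ExecutorService pool, int userId) throws Exception {
        String tier = fetchTier(pool, userId).get(); // this thread now waits for call #1
        if (tier.equals("premium")) {
            return fetchRow(pool, tier).get(); // ...and waits again for call #2
        }
        return "default-row";
    }
}
```

The answer is correct, but the caller's thread sits idle for the whole chain. Avoiding that with plain Futures means hand-written callbacks or polling, which is the complexity the paragraph above refers to.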

Callbacks Have Their Own Problems

Callbacks offer a solution to the tendency to block on Future.get() by not allowing anything to block. They are naturally efficient because they execute when the response is ready.

Similar to Futures though, they are easy to use with a single level of asynchronous execution but become unwieldy with nested composition.

Reactive

Functional reactive offers efficient execution and composition by providing a collection of operators capable of filtering, selecting, transforming, combining and composing Observables.

The Observable data type can be thought of as a "push" equivalent to Iterable which is "pull". With an Iterable, the consumer pulls values from the producer and the thread blocks until those values arrive. By contrast with the Observable type, the producer pushes values to the consumer whenever values are available. This approach is more flexible, because values can arrive synchronously or asynchronously.

The Observable type adds two missing semantics to the Gang of Four's Observer pattern, which are available in the Iterable type:

- The ability for the producer to signal to the consumer that there is no more data available.

- The ability for the producer to signal to the consumer that an error has occurred.

With these two simple additions, we have unified the Iterable and Observable types. The only difference between them is the direction in which the data flows. This is very important because now any operation we perform on an Iterable can also be performed on an Observable. Let's take a look at an example ...
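To make the symmetry concrete, here is a deliberately tiny push-based sketch. `MiniObserver` and `MiniObservable` are hypothetical names; this is not RxJava's API. The observer receives values plus the two extra signals listed above. `map` and `filter` behave like their Iterable counterparts, except that values are pushed to them instead of pulled.

```java
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Predicate;

/** Receives pushed values plus the two signals Iterable already had: "done" and "failed". */
interface MiniObserver<T> {
    void onNext(T value);

    void onError(Throwable error); // Iterable equivalent: next() throws

    void onCompleted(); // Iterable equivalent: hasNext() returns false
}

/** A deliberately tiny push-based sequence for illustration; RxJava's Observable is far richer. */
final class MiniObservable<T> {
    private final Consumer<MiniObserver<T>> onSubscribe;

    MiniObservable(Consumer<MiniObserver<T>> onSubscribe) {
        this.onSubscribe = onSubscribe;
    }

    /** Pushes every element of an Iterable, then completes, or reports the first error. */
    static <T> MiniObservable<T> from(Iterable<T> values) {
        return new MiniObservable<>(observer -> {
            try {
                for (T value : values) {
                    observer.onNext(value);
                }
            } catch (RuntimeException e) {
                observer.onError(e);
                return;
            }
            observer.onCompleted();
        });
    }

    void subscribe(MiniObserver<T> observer) {
        onSubscribe.accept(observer);
    }

    /** Like mapping over an Iterable, but applied to values as they are pushed. */
    <R> MiniObservable<R> map(Function<? super T, ? extends R> f) {
        return new MiniObservable<R>(downstream -> subscribe(new MiniObserver<T>() {
            @Override public void onNext(T value) { downstream.onNext(f.apply(value)); }
            @Override public void onError(Throwable error) { downstream.onError(error); }
            @Override public void onCompleted() { downstream.onCompleted(); }
        }));
    }

    /** Like filtering an Iterable: only matching values are pushed downstream. */
    MiniObservable<T> filter(Predicate<? super T> keep) {
        return new MiniObservable<T>(downstream -> subscribe(new MiniObserver<T>() {
            @Override public void onNext(T value) { if (keep.test(value)) downstream.onNext(value); }
            @Override public void onError(Throwable error) { downstream.onError(error); }
            @Override public void onCompleted() { downstream.onCompleted(); }
        }));
    }
}
```

An exception thrown inside `map` is delivered to `onError` instead of escaping from `subscribe`. That is the error channel plain callbacks lack.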

Observable Service Layer

The Netflix API takes advantage of Rx by making the entire service layer asynchronous (or at least appear so) - all "service" methods return an Observable<T>.

Making all return types Observable combined with a functional programming model frees up the service layer implementation to safely use concurrency. It also enables the service layer implementation to:

- conditionally return immediately from a cache

- block instead of using threads if resources are constrained

- use multiple threads

- use non-blocking IO

- migrate an underlying implementation from network based to in-memory cache

This can all happen without ever changing how client code interacts with or composes responses.

In short, client code treats all interactions with the API as asynchronous but the implementation chooses if something is blocking or non-blocking.

This next example code demonstrates how a service layer method can choose whether to synchronously return data from an in-memory cache or asynchronously retrieve data from a remote service and callback with the data once retrieved. In both cases the client code consumes it the same way.
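Here is a minimal sketch of that idea. It uses hypothetical names (`SimpleObservable`, `VideoTitleService`) and a simplified callback interface standing in for RxJava's Observable. A cache hit is emitted synchronously on the subscriber's thread, and a miss is fetched on a worker thread. The subscriber code is identical in both cases.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.function.Consumer;

/** Stand-in for an Observable (hypothetical): values arrive through callbacks after subscribe. */
interface SimpleObservable<T> {
    void subscribe(Consumer<T> onNext, Consumer<Throwable> onError, Runnable onCompleted);
}

/** Hypothetical service-layer method whose callers cannot tell a cache hit from a remote call. */
final class VideoTitleService {
    private final Map<Long, String> cache = new ConcurrentHashMap<>();
    private final ExecutorService workers;

    VideoTitleService(ExecutorService workers) {
        this.workers = workers;
    }

    SimpleObservable<String> getTitle(long videoId) {
        String cached = cache.get(videoId);
        if (cached != null) {
            // cache hit: emit synchronously on the subscriber's own thread
            return (onNext, onError, onCompleted) -> {
                onNext.accept(cached);
                onCompleted.run();
            };
        }
        // cache miss: perform the (simulated) remote call on a worker thread, then call back
        return (onNext, onError, onCompleted) -> workers.execute(() -> {
            try {
                String title = fetchRemotely(videoId);
                cache.put(videoId, title);
                onNext.accept(title);
                onCompleted.run();
            } catch (RuntimeException e) {
                onError.accept(e);
            }
        });
    }

    private String fetchRemotely(long videoId) {
        return "title-" + videoId; // placeholder for a real network call
    }
}
```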

Retaining this level of control in the service layer is a major architectural advantage particularly for maintaining and optimizing functionality over time. Many different endpoint implementations can be coded against an Observable API and they work efficiently and correctly with the current thread or one or more worker threads backing their execution.

The following code demonstrates the consumption of an Observable API with a common Netflix use case – a grid of movies:

That code is declarative and lazy as well as functionally "pure" in that no mutation of state is occurring that would cause thread-safety issues.

The API Service Layer is now free to change the behavior of the methods 'getListOfLists', 'getVideos', 'getMetadata', 'getBookmark' and 'getRating' (some blocking, others non-blocking) while all of them are consumed the same way.

In the example, 'getListOfLists' pushes each 'VideoList' object via 'onNext()' and then 'getVideos()' operates on that same parent thread. The implementation of that method could however change from blocking to non-blocking and the code would not need to change.
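A rough approximation of that grid composition can be written with Java 8's `CompletableFuture` swapped in for RxJava, so the block runs without dependencies. The services (`GridServices`, `MovieGrid`) and the fake catalog data are hypothetical. The method names mirror those mentioned above, but their signatures are assumptions. One real difference: a `CompletableFuture` starts work as soon as it is created, whereas the Observable version is lazy.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.stream.Collectors;
import java.util.stream.IntStream;

/** Hypothetical asynchronous service layer; every method may complete on a worker thread. */
final class GridServices {
    private final ExecutorService pool;

    GridServices(ExecutorService pool) {
        this.pool = pool;
    }

    CompletableFuture<List<String>> getListOfLists(String user) {
        return CompletableFuture.supplyAsync(() -> Arrays.asList("Comedies", "Dramas"), pool);
    }

    CompletableFuture<List<Integer>> getVideos(String list) {
        int base = list.equals("Comedies") ? 0 : 100; // fake catalog: 12 videos per row
        return CompletableFuture.supplyAsync(
                () -> IntStream.rangeClosed(base + 1, base + 12).boxed().collect(Collectors.toList()), pool);
    }

    CompletableFuture<String> getMetadata(int videoId) {
        return CompletableFuture.supplyAsync(() -> "title-" + videoId, pool);
    }

    CompletableFuture<Integer> getBookmark(int videoId) {
        return CompletableFuture.supplyAsync(() -> videoId * 10, pool);
    }

    CompletableFuture<Double> getRating(int videoId) {
        return CompletableFuture.supplyAsync(() -> 4.0, pool);
    }
}

final class MovieGrid {
    /** One row: the first 10 videos, each cell zipping metadata, bookmark and rating. */
    static CompletableFuture<List<String>> row(GridServices services, String list) {
        return services.getVideos(list).thenCompose(videos -> {
            List<CompletableFuture<String>> cells = videos.stream()
                    .limit(10)
                    .map(id -> services.getMetadata(id)
                            // the three calls for a video run concurrently and are then combined
                            .thenCombine(services.getBookmark(id), (title, position) -> title + "@" + position)
                            .thenCombine(services.getRating(id), (cell, rating) -> cell + "*" + rating))
                    .collect(Collectors.toList());
            return all(cells);
        });
    }

    static CompletableFuture<Map<String, List<String>>> grid(GridServices services, String user) {
        return services.getListOfLists(user).thenCompose(lists -> {
            Map<String, CompletableFuture<List<String>>> rows = new LinkedHashMap<>();
            for (String list : lists) {
                rows.put(list, row(services, list)); // rows are fetched concurrently
            }
            return CompletableFuture.allOf(rows.values().toArray(new CompletableFuture<?>[0]))
                    .thenApply(done -> {
                        Map<String, List<String>> result = new LinkedHashMap<>();
                        rows.forEach((name, cells) -> result.put(name, cells.join())); // all already complete
                        return result;
                    });
        });
    }

    /** Turns a list of futures into a future of a list, preserving order. */
    static <T> CompletableFuture<List<T>> all(List<CompletableFuture<T>> futures) {
        return CompletableFuture.allOf(futures.toArray(new CompletableFuture<?>[0]))
                .thenApply(done -> futures.stream().map(CompletableFuture::join).collect(Collectors.toList()));
    }
}
```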

RxJava

RxJava is our implementation of Rx for the JVM and is available in the Netflix repository on GitHub.

It is not yet feature complete relative to the .NET version of Rx, but what is implemented has been in production use within the Netflix API for the past year.

We are open sourcing the code as version 0.5 as a way to acknowledge that it's not yet feature complete. The outstanding work is logged in the RxJava Issues.

Documentation is available on the RxJava Wiki including links to material available on the internet.

Some of the goals of RxJava are:

- Stay close to the original Rx.NET implementation while adjusting naming conventions and idioms to Java

- All contracts of Rx should be the same

- Target the JVM not a language. The first languages supported (beyond Java itself) are Groovy, Clojure, Scala and JRuby. New language adapters can be contributed.

- Support Java 5 (to include Android support) and higher with an eventual goal to target a build for Java 8 with its lambda support.

Here is an implementation of one of the examples above but using Clojure instead of Groovy:

Summary

Functional reactive programming with RxJava has enabled Netflix developers to leverage server-side concurrency without the typical thread-safety and synchronization concerns. The API service layer implementation has control over concurrency primitives, which enables us to pursue system performance improvements without fear of breaking client code.

RxJava is effective on the server for us and it spreads deeper into our code the more we use it.

We hope you find the RxJava project as useful as we have and look forward to your contributions.

If this type of work interests you we are always looking for talented engineers.

First NetflixOSS Meetup

The inaugural Netflix Open Source Software (NetflixOSS) meetup was held at Netflix headquarters in Los Gatos, California, on February 6th, 2013. We had over 200 attendees: individual developers, academics, vendors, and companies ranging from small startups to the largest global corporations.

For those who missed it, a video of the presentations made at the event is included below. We also had visitors from TechCrunch and GigaOM writing stories about us.

How to host a Tech Meetup

We were very happy with the way the meeting itself worked out, and Paul Guth wrote a wonderful blog post on what he saw (http://constructolution.wordpress.com/2013/02/06/netflix-teaches-everyone-how-to-host-a-tech-meetup/): “Netflix has once again set the bar. Not with their technology this time – but with their organizing. I just got back from the first meetup of the NetflixOSS group - and it was spectacular.”

The inside story of how this meetup happened provides another example of how Netflix culture works. We don’t have a recipe for a meetup; there was no master plan with Steve Jobs-like attention to getting the fine details exactly right, and there was no process. Instead, we told people the high-level goals of what we were trying to do, got out of the way, and trusted that they could figure it out or ask for clarification as needed.

On the day of the event we had excellent facilities support setting everything up, making sure people knew where to go, and staying up very late to put it all away. We had wonderful Asian finger food (sushi!) and plenty of beer and wine. There was signage, and there were large monitors at 10 separate demo stations staffed by the engineers who own individual NetflixOSS projects. Ruslan led the event overall and structured the talks, Adrian worked on the what/why message and the how-it-works slides, and Joris from PR got journalists to come along. Betty coordinated the event setup. The Netflix design team came up with a NetflixOSS logo, T-shirt design and TechTattoo stickers, and Leslie turned the designs into actual T-shirts and stickers.

A lot of work from a lot of people went into the meetup, but it was fun and frictionless, with immediate buy-in. It sounds too good to be true, but good ideas “get legs” at Netflix and take off in a way that isn’t often seen at other companies. Netflix gets out of the way of innovation. We get more done with fewer people in less time, and this is a key ingredient in maintaining high talent density: employees aren’t frustrated by bureaucracy, because the default behavior is to trust their judgement. Just as with NetflixOSS components, we apply a similar philosophy to our people: the whole is greater than the sum of the parts. Together, the amazing people we have are able to accomplish much more than all of their individual accomplishments put together.

NetflixOSS History

Ruslan opened by showing everyone an email exchange. In mid-2011 Jordan sent an email to some managers asking what the process was to open source a project; the reply was that there was no process or policy, just go ahead. Jordan then asked if he should just put on Apache license headers and show it to legal, and the response was “If you think legal review is going to improve your code quality, go ahead!” When the code was ready, it was released in late 2011 as Curator. During 2012 another 15 projects were added, and three more have already been added in 2013.

We were releasing the platform that runs Netflix streaming one piece at a time, and other people started using bits and pieces individually. The transition we are making in 2013 is to put the puzzle pieces together as a coherent platform, brand it NetflixOSS, and make it easy to adopt as a complete Platform as a Service solution. NetflixOSS supports teams of developers who are deploying large-scale, globally distributed applications. Netflix has hundreds of developers deploying on tens of thousands of instances across several AWS Regions to support the global streaming product.

Why Open Source the Platform?

In 2009-2010 Netflix was figuring out the architecture from first principles, trying to follow AWS guidelines and building a platform based on the scalability experience some of our engineers had gained at places like Yahoo, eBay, and Google. In 2011 and 2012 we started talking about how we had built a large-scale cloud-native application, and other companies began to follow our patterns. What was bleeding-edge innovation in 2009 became accepted best practice by 2012, and is becoming a commodity in 2013-2014. Many competing cloud platforms have appeared, and by making it easy for people to adopt NetflixOSS we hope to become part of a larger ecosystem rather than having the industry pass us by.

When we started open sourcing pieces of our infrastructure, we had several goals for the program, outlined in this Techblog post. We’re seeing great adoption and engagement across many developers and companies, and an increasing stream of feedback and external contributions. There is a growing number of third-party projects that utilize NetflixOSS components; some of them are listed on our GitHub page.

Putting it all Together

We released NetflixOSS bit by bit, and we don’t have a naming convention, so it can be hard to figure out how the projects fit together. Adrian presented a series of slides explaining how they combine to form a build system, a set of services that support the platform, a collection of libraries that applications build against, and testing and maintenance functionality.

The Portability Question

When we built our platform we focused on what mattered to Netflix: scalability, so we could roll out our streaming product globally; functionality, so that our developers could build faster; and availability, by leveraging multiple availability zones in each region. We regard portability as an issue we can defer, since AWS is so far ahead of the market in terms of functionality and scale, and we don’t want to hobble ourselves in the short term. However, as we share the NetflixOSS platform, there is demand from end users and vendors to begin porting some of the projects for use in datacenter and hybrid cloud configurations. For the foreseeable future Netflix is planning to stay on AWS, but in the long term portability may be useful to us as well.

A New Project - Denominator

We announced a brand new project at the Meetup. We are working on multi-region failover and traffic-sharing patterns to provide higher availability for the streaming service during regional outages caused by our own bugs and AWS issues. To do this we need to directly control the DNS configuration that routes users to each region and each zone. When we looked at the features and vendors in this space, we found that we were already using AWS Route53, which has a nice API but is missing some advanced features; Neustar UltraDNS, which has a SOAP-based API; and DynECT, which has an awkwardly structured REST API. We couldn’t find a Java-based API that abstracted all these vendors into the common set of capabilities we are interested in, so we are creating one. The idea is that any feature supported by more than one vendor API is the highest common denominator, and that functionality can be switched between vendors as needed, or in the event of a DNS vendor outage.

With most NetflixOSS projects, we are already running the code in production by the time we open source it on GitHub. In the case of Denominator, we are already sharing the code with DNS vendors who are helping us get the abstraction model right, and we will make it generally available during the development process. Denominator is a Java library for controlling DNS; we are building it to be as portable as possible, with few dependencies. We will embed it in services such as Edda, which collects the historical state of our cloud. This project is being led by Adrian Cole, who is well known as the author of the cross-platform jClouds open source project. He recently joined Netflix and is bringing a lot of valuable experience to our platform team.
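The "switch vendors during an outage" idea can be sketched as a vendor-neutral interface plus a wrapper that falls back to the next provider when a call fails. This is a minimal illustration under that assumption only: `DnsProvider` and `FailoverDns` are hypothetical names and are not Denominator's API.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical vendor-neutral interface (NOT Denominator's actual API): only operations
// that every supported DNS vendor offers belong here.
interface DnsProvider {
    List<String> listZones();
}

// Tries each configured vendor in order, moving on to the next one when a call fails,
// for example during a DNS vendor outage.
class FailoverDns {
    private final List<DnsProvider> providers;

    FailoverDns(List<DnsProvider> providers) {
        this.providers = new ArrayList<>(providers);
    }

    List<String> listZones() {
        RuntimeException lastFailure = null;
        for (DnsProvider provider : providers) {
            try {
                return provider.listZones();
            } catch (RuntimeException e) {
                lastFailure = e; // remember the failure and fall through to the next vendor
            }
        }
        throw new IllegalStateException("all DNS providers failed", lastFailure);
    }
}
```

Keeping the interface to the shared subset of features is what makes the providers interchangeable; vendor-specific extras would have to live outside it.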

Announcements

In addition to announcing Denominator, we announced that the next NetflixOSS Meetup will be on March 13th and opened signup during the meeting. We are planning some surprise announcements for that event, and within two days we already had over 200 attendees registered.

Video and Slides

The video contains the introduction by Ruslan and Adrian, the lightning talks by each engineer, and an extended demonstration of the Asgard console.

Netflix Queue: Data migration for a high volume web application

- High Data Consistency

- High Reliability and Availability

- No downtime for reads and writes

- No degradation in performance of the existing application

- Reads: Only from SimpleDB (Source of truth)

- Writes: SimpleDB and Cassandra

- Reads: SimpleDB (Source of truth) and Cassandra

- Writes: SimpleDB and Cassandra

- Reads: Cassandra (Source of truth)

- Writes: Cassandra
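The three read/write configurations above can be read as successive migration phases. The following minimal sketch works under that assumption, with in-memory maps standing in for SimpleDB and Cassandra. `MigratingStore`, its `Phase` values and the mismatch list are hypothetical, and comparing the two stores during dual reads is our assumption about how the second phase is used, not something stated above.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Objects;

class MigratingStore {
    enum Phase { SIMPLEDB_READS, DUAL_READS, CASSANDRA_ONLY }

    final Map<String, String> simpleDb = new HashMap<>();   // stand-in for SimpleDB
    final Map<String, String> cassandra = new HashMap<>();  // stand-in for Cassandra
    final List<String> mismatches = new ArrayList<>();
    Phase phase = Phase.SIMPLEDB_READS;

    // Phases 1 and 2 write to both stores; phase 3 writes only to Cassandra.
    void write(String key, String value) {
        if (phase != Phase.CASSANDRA_ONLY) {
            simpleDb.put(key, value);
        }
        cassandra.put(key, value);
    }

    String read(String key) {
        switch (phase) {
            case SIMPLEDB_READS:
                return simpleDb.get(key);
            case DUAL_READS: {
                String truth = simpleDb.get(key); // SimpleDB is still the source of truth
                // assumption: Cassandra is read only to verify consistency
                if (!Objects.equals(truth, cassandra.get(key))) {
                    mismatches.add(key);
                }
                return truth;
            }
            default: // CASSANDRA_ONLY: Cassandra is now the source of truth
                return cassandra.get(key);
        }
    }
}
```

Keys written before dual writes began show up as mismatches, which is one way to see that historical data must also be copied before Cassandra can become the source of truth.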

Announcing EVCache: Distributed in-memory datastore for Cloud

EVCache is a distributed in-memory caching solution based on memcached and spymemcached that is well integrated with Netflix OSS and AWS EC2 infrastructure. Today we are announcing the open sourcing of the EVCache client library on GitHub.

EVCache is an abbreviation for:

Ephemeral - The data stored is for a short duration as specified by its TTL (Time To Live).

Volatile - The data can disappear any time (Evicted).

Cache - An in-memory key-value store.
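A minimal single-process sketch of the ephemeral and volatile semantics described above, assuming least-recently-used eviction (an access-ordered `LinkedHashMap`) and an injectable clock so expiry is easy to test. `EphemeralCache` is a hypothetical class; in EVCache these policies are enforced by memcached on the server side.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.LongSupplier;

class EphemeralCache<K, V> {
    // A stored value plus the absolute time (ms) at which it expires.
    private static final class Item<T> {
        final T value;
        final long expiresAtMillis;

        Item(T value, long expiresAtMillis) {
            this.value = value;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final LongSupplier clock; // injectable clock, e.g. System::currentTimeMillis
    private final LinkedHashMap<K, Item<V>> items;

    EphemeralCache(int capacity, LongSupplier clock) {
        this.clock = clock;
        // accessOrder = true keeps entries in least-recently-used order
        this.items = new LinkedHashMap<K, Item<V>>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<K, Item<V>> eldest) {
                return size() > capacity; // "volatile": evict the LRU entry when full
            }
        };
    }

    // "Ephemeral": every value is stored with a TTL.
    void set(K key, V value, long ttlMillis) {
        items.put(key, new Item<>(value, clock.getAsLong() + ttlMillis));
    }

    // Returns null on a miss: never stored, expired, or evicted.
    V get(K key) {
        Item<V> item = items.get(key);
        if (item == null) {
            return null;
        }
        if (clock.getAsLong() >= item.expiresAtMillis) {
            items.remove(key);
            return null;
        }
        return item.value;
    }
}
```

Callers therefore have to treat every `get` as possibly missing and fall back to the source of truth, which is exactly the contract "ephemeral" and "volatile" describe.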

The advantages of distributed caching are:

- Faster response time compared to data being fetched from source/database

- Reduces the load and number of servers needed to handle the requests as most of the requests are served by the cache

- Increases the throughput of the services fronted by the cache

Please read our earlier blog post for more details about EVCache.

What is an EVCache App?

An EVCache App is a logical grouping of one or more memcached instances (servers). Each instance can be one of the following:

- EVCache Server (to be open sourced soon) running memcached and a Java sidecar app

- EC2 instance running memcached

- ElastiCache instance

- Any instance that speaks the memcached protocol (e.g. Couchbase, MemcacheDB)

Each app is associated with a name. Though it is not recommended, a memcached instance can be shared across multiple EVCache Apps.

What is an EVCache Client?

The EVCache client manages all operations between a Java application and an EVCache App.

What is an EVCache Server?

An EVCache Server is an EC2 instance running an instance of memcached and a Java sidecar application. The sidecar is responsible for interacting with Eureka, monitoring the memcached process, and collecting and reporting performance data to Servo. This will be open sourced soon.

Generic EVCache Deployment

Figure 1 shows an EVCache App consisting of 3 memcached nodes with an EVCache client connecting to it.

Multi-Cluster EVCache Deployment

Figure 2 shows an EVCache App in 2 Clusters (A & B) with 3 memcached nodes in each Cluster. Data is replicated between the two clusters. To achieve low latency, reliability and isolation, all the EC2 instances for a cluster should be in the same availability zone. This way, if one availability zone is having issues, the performance of the other zone is not impacted. In a scenario where we lose instances in one cluster, we can dynamically set that cluster to “write only” and direct all the read traffic to the other zone. This ensures that latency and cache hit rate are not impacted.
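A minimal sketch of the replication and "write only" behavior described above, with in-memory maps standing in for each zone's memcached cluster. `ReplicatedCacheClient` and its methods are hypothetical and are not the EVCache client API.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

class ReplicatedCacheClient {
    // zone name -> in-memory stand-in for that zone's memcached cluster
    private final Map<String, Map<String, String>> clusters = new LinkedHashMap<>();
    private final Set<String> writeOnlyZones = new HashSet<>();
    private final String localZone;

    ReplicatedCacheClient(String localZone, String... zones) {
        this.localZone = localZone;
        for (String zone : zones) {
            clusters.put(zone, new HashMap<>());
        }
    }

    // Writes are replicated to every cluster, including ones marked "write only",
    // so a recovering cluster keeps receiving fresh data.
    void set(String key, String value) {
        for (Map<String, String> cluster : clusters.values()) {
            cluster.put(key, value);
        }
    }

    void setWriteOnly(String zone, boolean writeOnly) {
        if (writeOnly) writeOnlyZones.add(zone); else writeOnlyZones.remove(zone);
    }

    // Reads prefer the local zone; if it is write only, use the first readable zone.
    String readZone() {
        if (clusters.containsKey(localZone) && !writeOnlyZones.contains(localZone)) {
            return localZone;
        }
        for (String zone : clusters.keySet()) {
            if (!writeOnlyZones.contains(zone)) return zone;
        }
        throw new IllegalStateException("no readable cluster");
    }

    String get(String key) {
        return clusters.get(readZone()).get(key);
    }
}
```

Because every write already lands in every cluster, redirecting reads costs a cross-zone hop but not cache hit rate, which matches the motivation given above.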

EVCache Deployment using Eureka

Figure 3 shows an EVCache App in 3 Clusters (A, B & C) with 3 EVCache servers in each Cluster. Each cluster is in one availability zone. An EVCache server (to be open sourced soon) consists of a memcached instance and a sidecar app. The sidecar app interacts with the Eureka Server and monitors the memcached process.

Netflix OSS - All Netflix Open Source Software

EVCache - Sources & EVCache Wiki - Documentation & Configuration

memcached - A high-performance in-memory data store

spymemcached - Java Client to memcached

Archaius - Library for configuration management API

Eureka - Discovery and management of EVCache servers

Servo - Application Monitoring Library

If you like building infrastructure components like this, for a service that millions of people use world wide, take a look at http://jobs.netflix.com.

Denominator: A Multi-Vendor Interface for DNS

Overview

Command Line Usage

chmod 755 denominator
./denominator -p ultradns -c username -c password zone list

./denominator -p route53 --zone foo.com. record add --name hostname.foo.com. --type A --ec2-public-ipv4

./denominator ... record add hostname.foo.com 3600 IN A 1.2.3.4

Library Structure and Features

manager = Denominator.create("ultradns", credentials(username, password));

for (Iterator<String> zone = manager.getApi().getZoneApi().list(); zone.hasNext();) {
    processZone(zone.next());
}

mxData.getPreference();
mxData.get("preference");
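One way to offer both access styles shown above is a record-data class that is itself a map and also exposes typed getters. This is a hypothetical sketch of that design; the `MxData` class here is not Denominator's own class.

```java
import java.util.LinkedHashMap;

// Hypothetical MX record data: a map of field name -> value, plus typed getters.
class MxData extends LinkedHashMap<String, Object> {
    private static final long serialVersionUID = 1L;

    MxData(int preference, String exchange) {
        put("preference", preference); // map-style view of the record's fields
        put("exchange", exchange);
    }

    // Typed view over the same underlying entries.
    int getPreference() {
        return (Integer) get("preference");
    }

    String getExchange() {
        return (String) get("exchange");
    }
}
```

The map view lets generic tooling, such as a command line or a serializer, handle any record type without knowing its fields, while the typed getters keep application code readable.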

Karyon: The nucleus of a Composable Web Service

Overview

As explained in previous blog posts, Netflix employs a fine-grained Service Oriented Architecture. We also believe in, and strive towards, a homogeneous architecture. This has the benefit of code and component reuse, as well as the operational efficiency gained from a common understanding and common usage patterns.

Given that several teams at Netflix have built and deployed hundreds of web services, how do we ensure a homogeneous architecture and share common patterns?

Meet Karyon, the nucleus of our Cloud Platform stack.

Karyon, in the context of molecular biology, is essentially "a part of the cell containing DNA and RNA and responsible for growth and reproduction."

At Netflix, Karyon is a framework and library that essentially contains the blueprint of what it means to implement a cloud-ready web service. All the other fine-grained web services and applications that form our SOA graph can essentially be thought of as being cloned from this basic blueprint.

Today, we would like to announce the general availability of Karyon as part of our rapidly growing NetflixOSS stack. Thus far we have open sourced many components that form the Netflix Cloud Platform, and with the release of Karyon, you now have a way to glue them all together.

Karyon has the following main ingredients.

- Bootstrapping, Libraries and Lifecycle Management (via Governator)

- Runtime Insights and Diagnostics (via built in Admin Console)

- Pluggable Web Resources (via JSR-311 and Jersey)

- Cloud-Ready hooks (Service Registration and Discovery, HealthCheck hooks etc.)

Bootstrapping, Libraries and Lifecycle Management

A typical web service needs to load configurations and initialize itself and all its dependencies. Karyon makes this easy with its component-driven architecture.

In Karyon, the Application represents a standalone application or the main class of a Web Service. Each Application may consist of many Components.

Each Component can be thought of as belonging to a very complex graph of interdependent components. Karyon relies on Governator to help walk this graph and manage the lifecycle of these components.

You can read more about Governator at our Github location.
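The core idea of walking a graph of interdependent components can be sketched as a depth-first topological sort: start each component after its dependencies, and stop them in reverse order. `LifecycleManager` and the component names are hypothetical; Governator itself builds on Guice, and its API differs.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

class LifecycleManager {
    private final Map<String, List<String>> dependsOn = new LinkedHashMap<>();
    private final List<String> started = new ArrayList<>();

    void register(String component, String... dependencies) {
        dependsOn.put(component, Arrays.asList(dependencies));
    }

    // Returns the start order: every component appears after all of its dependencies.
    List<String> startAll() {
        started.clear();
        Set<String> visiting = new HashSet<>();
        Set<String> done = new HashSet<>();
        for (String component : dependsOn.keySet()) {
            visit(component, visiting, done);
        }
        return new ArrayList<>(started);
    }

    private void visit(String component, Set<String> visiting, Set<String> done) {
        if (done.contains(component)) return;
        if (!visiting.add(component)) {
            throw new IllegalStateException("dependency cycle involving " + component);
        }
        for (String dep : dependsOn.getOrDefault(component, Collections.emptyList())) {
            visit(dep, visiting, done);
        }
        visiting.remove(component);
        done.add(component);
        started.add(component); // a real container would invoke the start hook here
    }

    // Returns the stop order: the reverse of the start order.
    List<String> stopAll() {
        List<String> order = new ArrayList<>(started);
        Collections.reverse(order);
        started.clear();
        return order;
    }
}
```

Stopping in reverse order guarantees that no component is shut down while something that depends on it is still running.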

Admin Console (Runtime Insights and Diagnostics)

Karyon contains a pluggable Admin Console component that provides a visual interface for observing and managing other components. The currently open sourced version contains only a first-cut stand-in console; we will soon replace it with the more feature-rich Console that we use in-house.

For example, the Admin Console provides a visual interface for the following components.

- Libraries (i.e. all the libraries loaded, their versions and other meta data)

- Properties Management (i.e. Properties inspection and Runtime editing via Archaius)

- JMX Console

- and more ... (Servo metrics, Ribbon Clients and Stats, Services discovered via Eureka etc.)

JMX Console

Properties Console

The screenshot above shows the Properties Console. Karyon offers Archaius as its default Properties Management Component. Note: The more advanced Admin Console shown in the screenshot above will be released in the coming weeks.

Pluggable Web Resources

JSR-311 allows for a very flexible and pluggable Web Resource container. Karyon essentially utilizes and extends this via Ribbon.

Cloud Ready

Karyon makes it easy to integrate with Service Discovery components such as Netflix's Eureka, along with several of the components mentioned below that aid in building a resilient and scalable web service. Karyon forms the nucleus that holds all these together.

Upcoming features

On top of bootstrapping and the features mentioned above, Karyon at Netflix also provides flexible runtime semantics, notably:

- Request context propagation across services (when used with Ribbon).

- Enforcing/reporting SLAs.

- Request tracing.

- Request throttling.

Getting Started

The best way to get started with Karyon and building Web Services is to clone the "HelloWorld" sample web service available on GitHub. The Getting Started page offers more details.

Conclusion

Karyon aims to provide the basic ingredients that form the nucleus of most web services and applications. While Karyon provides the main ingredients for a cloud-ready web service, it does not showcase out of the box many of the other components that form the Platform Stack.

A typical web service at Netflix uses many of the building blocks and design patterns in the Netflix Cloud Platform stack. For example:

- Runtime Configuration of Properties via Archaius

- Inter Process Communication and Resilient Load Balancing via Ribbon

- Latency and Fault tolerance via Hystrix

- Functional Reactive Programming via RxJava.

If you would like to contribute to our highly scalable libraries and frameworks for ephemeral distributed environments, please take a look at http://netflix.github.com. You can follow us on twitter at @NetflixOSS.

We will be hosting a NetflixOSS Open House on the 13th of March, 2013 (Limited seats. Please RSVP.) during which we will showcase Karyon and other OSS projects.

We are constantly looking for great talent to join us and we welcome you to take a look at our Jobs page or contact @stonse for positions in the Cloud Platform Infrastructure team.

Python at Netflix

Developers at Netflix have the freedom to choose the technologies best suited for the job. More and more, developers turn to Python due to its rich batteries-included standard library, succinct and clean yet expressive syntax, large developer community, and the wealth of third party libraries one can tap into to solve a given problem. Its dynamic underpinnings enable developers to rapidly iterate and innovate, two very important qualities at Netflix. These features (and more) have led to increasingly pervasive use of Python in everything from small tools using boto to talk to AWS, to storing information with python-memcached and pycassa, managing processes with Envoy, polling restful APIs to large applications with requests, providing web interfaces with CherryPy and Bottle, and crunching data with scipy. To illustrate, here are some current projects taking advantage of Python:

Alerting

The Central Alert Gateway (CAG) is a RESTful web application written in Python to which any process can post an alert, though the vast majority of alerts are triggered by our telemetry system, Atlas (which will be open sourced in the near future). Based on configuration, CAG can send these alerts via email to interested parties, dispatch them to our notification system to page on-call engineers, suppress them if we’ve already alerted someone, or perform automated remediation actions (for example, reboot or terminate an EC2 instance if it starts appearing unhealthy). At our scale, we generate hundreds of thousands of alerts every day, and handling as many of these automatically as possible -- and making sure to only notify people of new issues rather than telling them again about something they’re aware of -- is critical to our production efficiency (and quality of life).
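The suppression step (not telling people again about an issue they already know about) can be sketched as a per-key time window. `AlertSuppressor`, the alert keys and the window length are hypothetical, the example is in Java rather than CAG's Python, and the source does not say how CAG actually decides that someone has already been alerted.

```java
import java.util.HashMap;
import java.util.Map;

class AlertSuppressor {
    private final long windowMillis;
    private final Map<String, Long> lastNotifiedAt = new HashMap<>();

    AlertSuppressor(long windowMillis) {
        this.windowMillis = windowMillis;
    }

    // true: dispatch (email, page, remediation); false: someone was notified recently.
    boolean shouldNotify(String alertKey, long nowMillis) {
        Long last = lastNotifiedAt.get(alertKey);
        if (last != null && nowMillis - last < windowMillis) {
            return false;
        }
        lastNotifiedAt.put(alertKey, nowMillis);
        return true;
    }
}
```

The window is measured from the last notification that was actually sent, so a flapping alert pages at most once per window rather than once per occurrence.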

Chaos Gorilla

We’ve talked before about how we use Chaos Monkey to make sure our services are resilient to the termination of any small number of instances. As we’ve improved resiliency to instance failures, we’ve been working to set the reliability bar much, much higher. Chaos Gorilla integrates with Asgard and Edda, and allows us to simulate the loss of an entire availability zone in a given region. This sort of failure mode -- an AZ either going down or simply becoming inaccessible to other AZs -- happens once in a blue moon, but it’s a big enough problem that simulating it and making sure our entire ecosystem is resilient to that failure is very important to us.

Security Monkey and Howler Monkey

Security Monkey is designed to keep track of configuration history and alert on changes in EC2 security-related policies such as security groups, IAM roles, S3 access control lists, etc. This makes our Cloud Security team very happy, since without it there’s no way to know when, or how, a change occurred in the environment.

Howler Monkey is designed to automatically discover and keep track of SSL certificates in our environments and domain names, no matter where they may reside, and alert us as we get close to an SSL certificate’s expiration date, with flexible and powerful subscription and alerting mechanisms. Because of it, we moved from having an SSL certificate expire surprisingly and with production impact about once a quarter to having no production outages due to SSL expirations in the last eighteen months. It’s a simple tool that makes a huge difference for us and our dozens of SSL certificates.
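The expiration check at the heart of this is small. A minimal sketch, assuming a fixed warning threshold in days; `CertExpiryCheck` is a hypothetical Java class (Howler Monkey is written in Python), while `X509Certificate.getNotAfter()` is the standard JDK accessor for the end of a certificate's validity period.

```java
import java.security.cert.X509Certificate;
import java.time.Duration;
import java.time.Instant;

class CertExpiryCheck {
    // Whole days between now and the certificate's notAfter date (negative once expired).
    static long daysUntilExpiry(Instant notAfter, Instant now) {
        return Duration.between(now, notAfter).toDays();
    }

    // Alert once the remaining validity falls inside the warning threshold.
    static boolean shouldAlert(Instant notAfter, Instant now, long warnDays) {
        return daysUntilExpiry(notAfter, now) <= warnDays;
    }

    // getNotAfter() returns the end of the validity period as a java.util.Date.
    static boolean shouldAlert(X509Certificate cert, Instant now, long warnDays) {
        return shouldAlert(cert.getNotAfter().toInstant(), now, warnDays);
    }
}
```

The hard part in practice is discovery (finding every certificate wherever it resides); once a certificate is found, the check reduces to this comparison.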

Chronos

We push hard to always increase our speed of innovation, and at the same time reduce the cost of making changes in the environment. In the datacenter days, we forced every production change to be logged in a change control system because the first question everyone asks when looking at an issue is “What changed recently?”. We found that a formal change control system didn’t work well with our culture of freedom and responsibility, so we deprecated the formal change control process for the vast majority of changes in favor of Chronos. Chronos accepts events via a REST interface and allows humans and machines to ask questions like “what happened in the last hour?” or “what software did we deploy in the last day?”. It integrates with our monkeys and Asgard so the vast majority of changes in our environment are automatically reported to it, including event types such as deployments, AB tests, security events, and other automated actions.
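A minimal in-memory sketch of the kind of question Chronos answers ("what happened in the last hour?"). `ChangeLog`, its `Event` fields and the type strings are hypothetical; Chronos itself receives events over a REST interface.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;

class ChangeLog {
    static final class Event {
        final Instant at;
        final String type;    // e.g. "deployment", "abtest", "security"
        final String summary;

        Event(Instant at, String type, String summary) {
            this.at = at;
            this.type = type;
            this.summary = summary;
        }
    }

    private final List<Event> events = new ArrayList<>();

    // Chronos receives events over REST; here they are appended directly.
    void record(Event event) {
        events.add(event);
    }

    // "What happened in the last <window>?" A null type matches every event type.
    List<Event> since(Instant now, Duration window, String type) {
        Instant cutoff = now.minus(window);
        return events.stream()
                .filter(e -> e.at.isAfter(cutoff))
                .filter(e -> type == null || type.equals(e.type))
                .collect(Collectors.toList());
    }
}
```

A query such as `since(now, Duration.ofDays(1), "deployment")` corresponds to "what software did we deploy in the last day?".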

Aminator

Readers of the blog or those who have seen our engineers present on the Netflix Platform may have seen numerous references to baking -- our name for the process by which we take an application and turn it into a deployable Amazon Machine Image. Aminator is the tool that does the heavy lifting and produces almost every single image that powers Netflix.

Aminator attaches a foundation image to a running EC2 instance, preps the image, installs packages into the image, and turns the resultant image into a complete Netflix application. Simple in concept and execution, but absolutely critical to our success. Pre-staging images and avoiding post-launch configuration really helps when launching hundreds or thousands of instances.

Cass Ops

Netflix Cassandra Operations uses Python for automation and monitoring tools. We have created many modules for management and maintenance of our Cassandra clusters. These modules use REST APIs to interface with other Netflix tools to manage our instances within AWS as well as interfacing directly with the Cassandra instances themselves. These activities include creating clusters using Asgard, tracking our inventory with Edda, monitoring Eureka to make sure clusters are visible to clients, managing Cassandra repairs and compactions, and doing software upgrades. In addition to our internally developed tools, we take advantage of various Python packages. We use JenkinsAPI to interface with Jenkins for both job configuration and status information on our monitoring and maintenance jobs. Pycassa is used to access our operational data stored in Cassandra. Boto gives us the ability to communicate with various AWS services such as S3 storage. Paramiko allows us to ssh to instances without needing to create a subprocess. Use of Python for these tools has allowed us to rapidly develop and enhance our tools as Cassandra has grown at Netflix.

Data Science and Engineering

Our Data Science and Engineering teams rely heavily on Python to help surface insights from the vast quantities of data produced by the organization. Python is used in tools for monitoring data quality, managing data movement and syncing, expressing business logic inside our ETL workflows, and running various web applications to visualize data.

One such application is Sting, a lightweight RESTful web service that slices, dices, and produces visualizations of large in-memory datasets. Our data science teams use Sting to analyze and iterate against the results of Hive queries on our big data platform. While a Hive query may take hours to complete, once the initial dataset is loaded in Sting, additional iterations using OLAP-style operations enjoy sub-second response times. Datasets can be set to refresh periodically, so results stay up to date. Sting is written entirely in Python, making heavy use of libraries such as pandas and numpy to perform fast filtering and aggregation operations.
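Sting performs these operations with pandas and numpy. Purely to illustrate what a slice-and-dice operation means, here is the equivalent filter, group-by and sum in Java streams; the `Row` fields and any data are hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

class SliceDice {
    // Hypothetical row of an in-memory dataset: two dimensions and one measure.
    static final class Row {
        final String country;
        final String device;
        final long hours;

        Row(String country, String device, long hours) {
            this.country = country;
            this.device = device;
            this.hours = hours;
        }
    }

    // "Slice" to one country, then "dice" by device, summing the measure per device.
    static Map<String, Long> hoursByDevice(List<Row> rows, String country) {
        return rows.stream()
                .filter((Row r) -> r.country.equals(country))
                .collect(Collectors.groupingBy((Row r) -> r.device, TreeMap::new,
                        Collectors.summingLong((Row r) -> r.hours)));
    }
}
```

Because the dataset is already in memory, each such re-aggregation avoids another multi-hour Hive query, which is where the sub-second iteration comes from.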

General Tooling and the Service Class

Pythonistas at Netflix have been championing the adoption of Python and striving to make its power accessible to everyone within the organization. To do this we wrapped libraries for many of the OSS tools now being released by Netflix, as well as a few internal services, in a general-use ‘Service’ class. With this we have helped our users quickly and easily stand up new services that have access to many common actions such as alerting, telemetry, Eureka, and easy AWS API access. We expect to make many of these libraries available this year and will be around to chat about them at PyCon!

Here is an example of how easily we can stand up a service that has Eureka registration, Route 53 registration, a basic status page and exposes a fully functional Bottle service:

These systems and applications comprise a glimpse of the overall use and importance of Python to Netflix. They contribute heavily to our overall service quality, allow us to rapidly innovate, and are a whole lot of fun to work on to boot!

We’re sponsoring PyCon this year, and in addition to a slew of Netflixers attending we’ll also have a booth at the expo area. If any of this sounds interesting, come by and chat. Also, we’re hiring Senior Site Reliability Engineers, Senior DevOps Engineers, and Data Science Platform Engineers.

Introducing the first NetflixOSS Recipe: RSS Reader

Our goal is to illustrate the power of the Netflix OSS platform by showing real-life examples. We hope to increase adoption by building out the Netflix OSS stack, increase awareness by holding more Netflix OSS meetups, lower the barriers by working on push-button deployments of our recipes in the coming months, etc.

“In computing, a news aggregator, also termed a feed aggregator, feed reader, news reader, RSS reader or simply aggregator, is client software or a Web application which aggregates syndicated web content such as news headlines, blogs, podcasts, and video blogs (vlogs) in one location for easy viewing.”

- Archaius: Dynamic configurations properties client.

- Astyanax: Cassandra client and pattern library.

- Blitz4j: Non-blocking logging.

- Eureka: Service registration and discovery.

- Governator: Guice-based dependency injection.

- Hystrix: Dependency fault tolerance.

- Karyon: Base server for inbound requests.

- Ribbon: REST client for outbound requests.

- Servo: Metrics collection.

RSS Reader Recipes Architecture

Recipes RSS Reader is composed of the following three major components:

RSS Middle Tier Service

The Hystrix dashboard for the Recipes RSS application during a sample run is shown below:

Getting Started

Public Continuous Integration Builds for our OSS Projects

Building Commits to Master

Verifying Pull Requests

Auto-Generating Build Jobs

AMI Creation with Aminator

Aminator is a tool for creating custom Amazon Machine Images (AMIs). It is the latest implementation of a series of AMI creation tools that we have developed over the past three years. A little retrospective on AMI creation at Netflix will help you better understand Aminator.

Building on the Basics

Very early in our migration to EC2, we knew that we would leverage some form of auto-scaling in the operation of our services. We also knew that application startup latency would be very important, especially during scale-up operations. We concluded that application instances should have no dependency on external services, be they package repositories or configuration services. The AMI would have to be discrete and hermetic. After hand-rolling AMIs for the first couple of apps, it was immediately clear that a tool for creating custom AMIs was needed. There are generally two strategies for creating Linux AMIs:

- Create from loopback

- Customize an existing AMI.

Foundation AMI

The initial component of our AMI construction pipeline is the foundation AMI. These AMIs will generally be pristine Linux distribution images, but in an AMI form that we can work with. Starting with a standard Linux distribution such as CentOS or Ubuntu, we mount an empty EBS volume, create a file system, install the minimal OS, snapshot the volume, and register an AMI based on the snapshot. That AMI and EBS snapshot are ready for the next step.

Base AMI

Most of our applications are Java / Tomcat based. To simplify development and deployment, we provide a common base platform that includes a stable JDK, a recent Tomcat release, and Apache, along with Python, standard configuration, monitoring, and utility packages.

The base AMI is constructed by mounting an EBS volume created from the foundation AMI snapshot, then customizing it with a meta package (RPM or DEB) that, through dependencies, pulls in the other packages that comprise the Netflix base AMI. This volume is unmounted, snapshotted, and then registered as a candidate base AMI, which makes it available for building application AMIs.

“Get busy baking or get busy writing configs” ~ mt

Customize Existing AMIs

Phase 1: Launch and Bake

Our first approach to making application AMIs was the simplest one: customize an existing AMI by first running an instance of it, modifying that instance, and then snapshotting the result. There are roughly five steps in this launch / bake process:

- Launch an instance of a base AMI.

- Provision an application package on the instance.

- Clean up the instance to remove state established by running the instance.

- Run the ec2-ami-tools on the instance to create and upload an image bundle.

- Register the bundle manifest to make it an AMI.

S3

While functional, this process is slow and became an impediment in the development lifecycle as our cloud footprint grew. To give an idea of how slow, an S3 bake often takes between 15 and 20 minutes. The slowness of creating an S3-backed AMI is due to the process being so I/O intensive. The I/O involved in the launch / bake process includes these operations:

- Download an S3 image bundle.

- Unpack bundle into the root file system.

- Provision the application package.

- Copy root file system to local image file.

- Bundle the local image file.

- Upload the image bundle to S3.

EBS

The advent of EBS backed AMIs was a boon to the AMI creation process, in large part due to the incremental nature of EBS snapshots. The launch / bake process improved significantly when we converted to EBS backed AMIs. Notice that there are fewer I/O operations (not to be confused with IOPS):

- Provision EBS volume.

- Load enough blocks from EBS to get a running OS.

- Provision the application package.

- Snapshot the root volume.
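Why incremental snapshots help can be shown with a toy model. This is a sketch of block-level incremental snapshots in general, not how EBS is actually implemented: each snapshot stores only the blocks that differ from its parent and reads everything else through the parent chain, so snapshotting a freshly provisioned volume only has to persist the blocks the application package touched. The block size and volume contents are made up.

```python
from dataclasses import dataclass
from typing import Dict, Optional

BLOCK_SIZE = 4  # toy block size in bytes; real block sizes are much larger


@dataclass
class Snapshot:
    blocks: Dict[int, bytes]  # only the blocks that differ from the parent
    parent: Optional["Snapshot"] = None

    def read_block(self, index: int) -> bytes:
        """Walk the snapshot chain until some snapshot holds this block."""
        snap = self
        while snap is not None:
            if index in snap.blocks:
                return snap.blocks[index]
            snap = snap.parent
        return bytes(BLOCK_SIZE)  # never-written blocks read as zeros


def take_snapshot(volume: bytes, parent: Optional[Snapshot] = None) -> Snapshot:
    """Store only the blocks of `volume` that changed since `parent`."""
    changed = {}
    for offset in range(0, len(volume), BLOCK_SIZE):
        index = offset // BLOCK_SIZE
        block = volume[offset:offset + BLOCK_SIZE]
        if parent is None or parent.read_block(index) != block:
            changed[index] = block
    return Snapshot(changed, parent)


base_volume = b"OSOSOSOSOSOS"              # base AMI: three blocks
base = take_snapshot(base_volume)
baked_volume = base_volume[:8] + b"APP!"   # provisioning rewrote one block
app = take_snapshot(baked_volume, base)
print(len(base.blocks), len(app.blocks))   # 3 1
```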

Phase 2: Bakery

The AMI Bakery was the next step in the evolution of our AMI creation tools. The Bakery was a big improvement over the launch / bake strategy because it does not customize a running instance of the base AMI. Rather, it customizes an EBS volume created from the base AMI snapshot. The time to obtain a serviceable EC2 instance is replaced by the time to create and attach an EBS volume.

The Bakery consists of a collection of bash command-line utilities installed on long-running bakery instances in multiple regions. Each bakery instance maintains a pool of base AMI EBS volumes which are asynchronously attached and mounted. Bake requests are dispatched to bakery instances from a central bastion host over ssh. Here is an outline of the bake process:

- Obtain a volume from the pool.

- Provision the application package on the volume.

- Snapshot the volume.

- Register the snapshot.
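The volume pool is what removes volume creation from the critical path of a bake. The Bakery itself is a set of bash utilities; the sketch below is a hypothetical Python rendering of the same idea, with `create_and_attach_volume` standing in for the real (slow) EBS create, attach, and mount work and `snap-base` as a made-up snapshot ID. A bake takes an already mounted volume immediately, and a replacement is prepared in the background.

```python
import threading
from collections import deque

_id_lock = threading.Lock()
_next_id = 0


def create_and_attach_volume(snapshot_id):
    """Hypothetical stand-in for creating an EBS volume from the base AMI
    snapshot, attaching it and mounting it (slow in real life)."""
    global _next_id
    with _id_lock:
        _next_id += 1
        return f"vol-{_next_id:04d}-{snapshot_id}"


class VolumePool:
    """Keeps `size` ready-to-use volumes and replaces each one handed out."""

    def __init__(self, snapshot_id, size):
        self.snapshot_id = snapshot_id
        self._ready = deque(create_and_attach_volume(snapshot_id) for _ in range(size))
        self._lock = threading.Lock()
        self._refills = []

    def _refill(self):
        volume = create_and_attach_volume(self.snapshot_id)
        with self._lock:
            self._ready.append(volume)

    def obtain(self):
        """Hand out a ready volume and start replacing it in the background."""
        with self._lock:
            if not self._ready:
                raise RuntimeError("volume pool exhausted")
            volume = self._ready.popleft()
        refill = threading.Thread(target=self._refill)
        refill.start()
        self._refills.append(refill)
        return volume

    def available(self):
        with self._lock:
            return len(self._ready)

    def wait_for_refills(self):
        for refill in self._refills:
            refill.join()


pool = VolumePool("snap-base", size=2)
first, second = pool.obtain(), pool.obtain()
pool.wait_for_refills()
print(first, second, pool.available())
```

The trade-off is operational: someone has to manage the pooled volumes, which is part of why a simpler tool might drop the pool entirely.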

The Bakery has been the de facto tool for AMI creation at Netflix for nearly two years, but we are nearing the end of its usefulness. The Bakery is customized for our CentOS base AMI and does not lend itself to experimenting with other Linux distributions such as Ubuntu, at least not without major refactoring of both the Bakery and the base AMI. There has also been a swell of interest in our Bakery from external users of our other open source projects, but it is not suitable for open sourcing because it is replete with assumptions about our operating environment.

Phase 3: Aminator

Aminator is a complete rewrite of the Bakery but utilizes the same operations to create an AMI.

Aminator is written in Python and uses several open source Python libraries such as boto, PyYAML, envoy, and others. As released, Aminator supports EBS backed AMIs for Red Hat and Debian based Linux distributions in Amazon's EC2. It is in use within Netflix for creating CentOS-5 AMIs and has been tested against Ubuntu 12.04, but its use is not limited to these distributions. The Aminator project is structured using a plugin architecture leveraging Doug Hellmann's stevedore library. Plugins can be written for other cloud providers, operating systems, or packaging formats.
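The value of the plugin architecture is that the core bake logic never hard-codes an OS or package format. stevedore discovers plugins registered under setuptools entry points; the sketch below deliberately does not use stevedore or Aminator's real plugin interfaces. It is a hypothetical in-process registry showing the same shape: the core asks for a provisioner by name and gets back an object that knows how to install a package on that distribution.

```python
from typing import Callable, Dict, List


class YumProvisioner:
    """Hypothetical provisioner plugin for Red Hat based distributions."""

    def install_command(self, package: str) -> List[str]:
        return ["yum", "-y", "install", package]


class AptProvisioner:
    """Hypothetical provisioner plugin for Debian based distributions."""

    def install_command(self, package: str) -> List[str]:
        return ["apt-get", "-y", "install", package]


# In-process stand-in for plugin discovery; stevedore would instead find
# plugins registered under a setuptools entry-point namespace.
PROVISIONERS: Dict[str, Callable[[], object]] = {
    "yum": YumProvisioner,
    "apt": AptProvisioner,
}


def load_provisioner(name: str):
    """Instantiate the provisioner plugin registered under `name`."""
    try:
        factory = PROVISIONERS[name]
    except KeyError:
        raise ValueError(f"no provisioner plugin named {name!r}") from None
    return factory()


print(load_provisioner("apt").install_command("myapp"))
```

Supporting another distribution then means registering one more plugin rather than touching the core.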

Aminator has fewer features than the Bakery. First, Aminator does not utilize a volume pool. Pool management is an optimization that we sacrificed for agility and manageability. Second, unlike the Bakery, Aminator does not create S3 backed AMIs. Since we have a handful of applications that deploy S3 backed AMIs, we continue to operate Bakery instances. In the future, we intend to eliminate the Bakery instances and run Aminator on our Jenkins build slaves. We also plan to integrate Amazon’s cross-region AMI copy into Aminator.

Aminator offers plenty of opportunity for prospective Netflix Cloud Prize entrants. We welcome and will consider contributions related to plugins, enhancements, or bug fixes. For more information on Aminator, see the project on GitHub.

System Architectures for Personalization and Recommendation

In our previous posts about Netflix personalization, we highlighted the importance of using both data and algorithms to create the best possible experience for Netflix members. We also talked about the importance of enriching the interaction and engaging the user with the recommendation system. Today we're exploring another important piece of the puzzle: how to create a software architecture that can deliver this experience and support rapid innovation. Coming up with a software architecture that handles large volumes of existing data, is responsive to user interactions, and makes it easy to experiment with new recommendation approaches is not a trivial task. In this post we will describe how we address some of these challenges at Netflix.

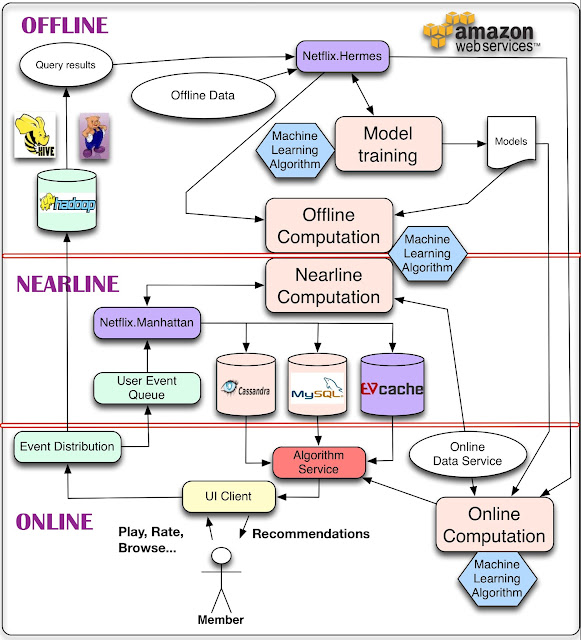

To start with, we present an overall system diagram for recommendation systems in the figure above. The main components of the architecture contain one or more machine learning algorithms.

The simplest thing we can do with data is to store it for later offline processing, which leads to part of the architecture for managing Offline jobs. However, computation can be done offline, nearline, or online. Online computation can respond better to recent events and user interaction, but has to respond to requests in real-time. This can limit the computational complexity of the algorithms employed as well as the amount of data that can be processed. Offline computation has fewer limitations on the amount of data and the computational complexity of the algorithms since it runs in a batch manner with relaxed timing requirements. However, it can easily grow stale between updates because the most recent data is not incorporated. One of the key issues in a personalization architecture is how to combine and manage online and offline computation in a seamless manner. Nearline computation is an intermediate compromise between these two modes in which we can perform online-like computations, but do not require them to be served in real-time. Model training is another form of computation that uses existing data to generate a model that will later be used during the actual computation of results. Another part of the architecture describes how the different kinds of events and data need to be handled by the Event and Data Distribution system. A related issue is how to combine the different Signals and Models that are needed across the offline, nearline, and online regimes. Finally, we also need to figure out how to combine intermediate Recommendation Results in a way that makes sense for the user. The rest of this post details these components of the architecture as well as their interactions. To do so, we break the general diagram into sub-systems and go into the details of each. As you read on, it is worth keeping in mind that our whole infrastructure runs across the public Amazon Web Services cloud.

Offline, Nearline, and Online Computation

As mentioned above, our algorithmic results can be computed either online in real-time, offline in batch, or nearline in between. Each approach has its advantages and disadvantages, which need to be taken into account for each use case.

Online computation can respond quickly to events and use the most recent data. An example is to assemble a gallery of action movies sorted for the member using the current context. Online components are subject to availability and response time Service Level Agreements (SLAs) that specify the maximum latency of the process in responding to requests from client applications while our member is waiting for recommendations to appear. This can make it harder to fit complex and computationally costly algorithms in this approach. Also, a purely online computation may fail to meet its SLA in some circumstances, so it is always important to think of a fast fallback mechanism such as reverting to a precomputed result. Computing online also means that the various data sources involved need to be available online, which can require additional infrastructure.
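The fallback idea can be sketched with Python's standard `concurrent.futures`: run the online ranking with a deadline and, if it misses the SLA, serve a precomputed result instead. The latency budget, titles, and `rank_online` scoring are hypothetical placeholders, not our actual ranking.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout

SLA_SECONDS = 0.1  # hypothetical latency budget for the online path

# Hypothetical precomputed (offline) gallery used as the fallback.
PRECOMPUTED = {"action": ["Movie A", "Movie B", "Movie C"]}

_executor = ThreadPoolExecutor(max_workers=4)


def rank_online(genre, context_boost, delay):
    """Placeholder for an online ranker that uses the current context."""
    time.sleep(delay)  # simulates an expensive computation
    return sorted(PRECOMPUTED[genre], key=lambda title: -context_boost.get(title, 0.0))


def gallery(genre, context_boost, delay=0.0):
    """Return (titles, source), falling back to the precomputed result on SLA miss."""
    future = _executor.submit(rank_online, genre, context_boost, delay)
    try:
        return future.result(timeout=SLA_SECONDS), "online"
    except FutureTimeout:
        return PRECOMPUTED[genre], "fallback"


print(gallery("action", {"Movie C": 1.0}))
print(gallery("action", {"Movie C": 1.0}, delay=0.5))
```

Note that the timed-out computation keeps running in its worker thread; the deadline only bounds how long the member waits.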

On the other end of the spectrum, offline computation allows for more choices in algorithmic approach, such as complex algorithms, and fewer limitations on the amount of data that is used. A trivial example might be to periodically aggregate statistics from millions of movie play events to compile baseline popularity metrics for recommendations. Offline systems also have simpler engineering requirements. For example, relaxed response time SLAs imposed by clients can be easily met. New algorithms can be deployed in production without the need to put too much effort into performance tuning. This flexibility supports agile innovation. At Netflix we take advantage of this to support rapid experimentation: if a new experimental algorithm is slower to execute, we can choose to simply deploy more Amazon EC2 instances to achieve the throughput required to run the experiment, instead of spending valuable engineering time optimizing performance for an algorithm that may prove to be of little business value. However, because offline processing does not have strong latency requirements, it will not react quickly to changes in context or new data. Ultimately, this can lead to staleness that may degrade the member experience. Offline computation also requires having infrastructure for storing, computing, and accessing large sets of precomputed results.
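The "trivial example" above, compiling baseline popularity from play events, looks like this in miniature. The events are hypothetical and held in memory; a production job would run the same aggregation over the full event history as a batch job.

```python
from collections import Counter


def popularity_baseline(play_events, top_n=3):
    """Batch job: count plays per title and keep the most played titles."""
    counts = Counter(title for _member_id, title in play_events)
    return [title for title, _plays in counts.most_common(top_n)]


# Hypothetical (member_id, title) play events; a real job would read millions
# of these from the data warehouse instead of an in-memory list.
events = [("m1", "A"), ("m2", "A"), ("m3", "B"), ("m1", "C"), ("m2", "B"), ("m4", "A")]
print(popularity_baseline(events))  # ['A', 'B', 'C']
```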

Nearline computation can be seen as a compromise between the two previous modes. In this case, computation is performed exactly like in the online case. However, we remove the requirement to serve results as soon as they are computed and can instead store them, allowing the computation to be asynchronous. The nearline computation is done in response to user events so that the system can be more responsive between requests. This opens the door for potentially more complex processing to be done per event. An example is to update recommendations to reflect that a movie has been watched immediately after a member begins to watch it. Results can be stored in an intermediate caching or storage back-end. Nearline computation is also a natural setting for applying incremental learning algorithms.
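A minimal sketch of the "movie has been watched" example, assuming a simple key-value store for the intermediate results: the handler runs when the play event arrives, and the online read path only returns what was stored. The store, member IDs, and handler names are hypothetical.

```python
from typing import Dict, List

Store = Dict[str, List[str]]


def on_play_started(store: Store, member_id: str, title: str) -> None:
    """Nearline handler: runs when the event arrives, not when a page is requested."""
    current = store.get(member_id, [])
    store[member_id] = [t for t in current if t != title]


def serve(store: Store, member_id: str) -> List[str]:
    """Online read path: just returns whatever the nearline handler stored."""
    return store.get(member_id, [])


# Hypothetical intermediate store (e.g. a cache) of per-member recommendations.
store: Store = {"member-1": ["Movie A", "Movie B", "Movie C"]}
on_play_started(store, "member-1", "Movie B")
print(serve(store, "member-1"))  # ['Movie A', 'Movie C']
```

Because the work happens per event, the next page request sees updated results without paying for the computation at request time.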

In any case, the choice of online/nearline/offline processing is not an either/or question. All approaches can and should be combined. There are many ways to combine them. We already mentioned the idea of using offline computation as a fallback. Another option is to precompute part of a result with an offline process and leave the less costly or more context-sensitive parts of the algorithms for online computation.
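One way to split the work, sketched under simple assumptions: an offline job precomputes scored candidates per member, and the cheap, context-sensitive part (here, filtering by a hypothetical "kids" profile) runs online. All titles, scores, and metadata are made up.

```python
# Offline (batch): expensive candidate generation, stored per member.
OFFLINE_CANDIDATES = {
    "member-1": [("Movie A", 0.9), ("Movie B", 0.8), ("Movie C", 0.7)],
}

# Hypothetical metadata used by the cheap online step.
KIDS_FRIENDLY = {"Movie B", "Movie C"}


def recommend(member_id, profile):
    """Online: filter the precomputed candidates by context, then order by score."""
    candidates = OFFLINE_CANDIDATES.get(member_id, [])
    if profile == "kids":
        candidates = [(t, s) for t, s in candidates if t in KIDS_FRIENDLY]
    return [t for t, _score in sorted(candidates, key=lambda ts: ts[1], reverse=True)]


print(recommend("member-1", "kids"))  # ['Movie B', 'Movie C']
```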

Even the modeling part can be done in a hybrid offline/online manner. This is not a natural fit for traditional supervised classification applications where the classifier has to be trained in batch from labeled data and will only be applied online to classify new inputs. However, approaches such as Matrix Factorization are a more natural fit for hybrid online/offline modeling: some factors can be precomputed offline while others can be updated in real-time to create a fresher result. Other unsupervised approaches such as clustering also allow for offline computation of the cluster centers and online assignment of new inputs to clusters. These examples point to the possibility of separating our model training into a large-scale and potentially complex global model training on the one hand and a lighter user-specific model training or updating phase that can be performed online.
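To make the Matrix Factorization case concrete, here is a sketch in which the item factors are assumed to come from an offline batch job and stay fixed, while a single member's factor vector is refit online with a few regularized gradient steps after a new rating arrives. The factor values, learning rate, and regularization are illustrative, not tuned.

```python
from typing import Dict, List

# Item factors: assumed to come from an offline batch training job (values made up).
ITEM_FACTORS: Dict[str, List[float]] = {
    "Movie A": [1.0, 0.0],
    "Movie B": [0.0, 1.0],
    "Movie C": [0.7, 0.7],
}


def predict(user: List[float], item: str) -> float:
    """Predicted rating: dot product of user and item factors."""
    return sum(u * v for u, v in zip(user, ITEM_FACTORS[item]))


def update_user(user, item, rating, lr=0.1, reg=0.01, steps=20):
    """Online step: refit only the user's vector; item factors stay fixed."""
    user = list(user)
    q = ITEM_FACTORS[item]
    for _ in range(steps):
        err = rating - predict(user, item)
        user = [u + lr * (err * qk - reg * u) for u, qk in zip(user, q)]
    return user


new_user = update_user([0.0, 0.0], "Movie A", 5.0)
print(new_user, predict(new_user, "Movie A"))
```

The expensive part (learning item factors over all members) stays offline; the per-member update touches a vector of length k and is cheap enough to run per event.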

Offline Jobs

Much of the computation we need to do when running personalization machine learning algorithms can be done offline. This means that the jobs can be scheduled to be executed periodically and their execution does not need to be synchronous with the request or presentation of the results. There are two main kinds of tasks that fall in this category: model training and batch computation of intermediate or final results. In the model training jobs, we collect relevant existing data and apply a machine learning algorithm that produces a set of model parameters (which we will henceforth refer to as the model). This model will usually be encoded and stored in a file for later consumption. Although most of the models are trained offline in batch mode, we also have some online learning techniques where incremental training is indeed performed online. Batch computation of results is the offline computation process defined above in which we use existing models and corresponding input data to compute results that will be used at a later time either for subsequent online processing or direct presentation to the user.
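The two kinds of offline tasks can be sketched end to end with a deliberately simple "model" (per-title mean ratings with a global mean as default). The ratings and JSON encoding are assumptions for illustration: training produces parameters that are written to a file, and a later batch job loads that file to compute results.

```python
import json
import os
import tempfile
from collections import defaultdict


def train_item_means(ratings):
    """Toy model training: per-title mean rating plus a global mean."""
    sums, counts = defaultdict(float), defaultdict(int)
    for _member_id, title, rating in ratings:
        sums[title] += rating
        counts[title] += 1
    total, n = sum(sums.values()), sum(counts.values())
    return {
        "global_mean": total / n if n else 0.0,
        "item_means": {t: sums[t] / counts[t] for t in sums},
    }


def save_model(model, path):
    """Encode the model parameters and store them in a file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(model, f)


def load_model(path):
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def batch_scores(model, titles):
    """Batch computation of results using a previously trained model."""
    means = model["item_means"]
    return {t: means.get(t, model["global_mean"]) for t in titles}


ratings = [("m1", "A", 4.0), ("m2", "A", 5.0), ("m1", "B", 3.0)]
path = os.path.join(tempfile.mkdtemp(), "model.json")
save_model(train_item_means(ratings), path)
print(batch_scores(load_model(path), ["A", "B", "C"]))  # {'A': 4.5, 'B': 3.0, 'C': 4.0}
```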

Both of these tasks need refined data to process, which usually is generated by running a database query. Since these queries run over large amounts of data, it can be beneficial to run them in a distributed fashion, which makes them very good candidates for running on Hadoop via either Hive or Pig jobs. Once the queries have completed, we need a mechanism for publishing the resulting data. We have several requirements for that mechanism: First, it should notify subscribers when the result of a query is ready. Second, it should support different repositories (not only HDFS, but also S3 or Cassandra, for instance). Finally, it should transparently handle errors and allow for monitoring and alerting. At Netflix we use an internal tool named Hermes that provides all of these capabilities and integrates them into a coherent publish-subscribe framework. It allows data to be delivered to subscribers in near real-time. In some sense, it covers some of the same use cases as Apache Kafka, but it is not a message/event queue system.
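Hermes is internal, so the following is not its API. It is a hypothetical, in-process sketch of the three requirements listed above: subscribers are notified when a dataset is ready, the location can point at any repository (the URIs are made up), and a failing subscriber is recorded for monitoring instead of blocking the others.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Callback = Callable[[str, str], None]


class Publisher:
    """Toy publish-subscribe notifier; not Hermes's actual API."""

    def __init__(self):
        self._subscribers: Dict[str, List[Callback]] = defaultdict(list)
        self.errors: List[str] = []  # hook for monitoring and alerting

    def subscribe(self, dataset: str, callback: Callback) -> None:
        self._subscribers[dataset].append(callback)

    def publish(self, dataset: str, location: str) -> None:
        """Notify every subscriber that `dataset` is ready at `location`."""
        for callback in self._subscribers[dataset]:
            try:
                callback(dataset, location)
            except Exception as exc:  # one failing subscriber must not block others
                self.errors.append(f"{dataset}: {exc}")


def broken_subscriber(dataset, location):
    raise OSError("disk full")


received = []
pub = Publisher()
pub.subscribe("popularity", lambda dataset, location: received.append(location))
pub.subscribe("popularity", broken_subscriber)
pub.publish("popularity", "s3://example-bucket/popularity/latest")
print(received, pub.errors)
```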

Signals & Models

Regardless of whether we are doing an online or offline computation, we need to think about how an algorithm will handle three kinds of inputs: models, data, and signals. Models are usually small files of parameters that have been previously trained offline. Data is previously processed information that has been stored in some sort of database, such as movie metadata or popularity. We use the term "signals" to refer to fresh information we input to algorithms. This data is obtained from live services and can be made of user-related information, such as what the member has watched recently, or context data such as session, device, date, or time.
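A small sketch of how the three inputs meet in a scoring function: a model (weights trained offline), data (stored popularity and metadata), and signals (fresh session information). The weights, titles, and signal fields are hypothetical and chosen only to show the roles each input plays.

```python
# Model: small set of parameters trained offline (values are made up).
MODEL = {"w_popularity": 0.6, "w_recent_genre": 0.4}

# Data: previously processed information, e.g. popularity and metadata.
DATA = {
    "Movie A": {"popularity": 0.9, "genre": "drama"},
    "Movie B": {"popularity": 0.5, "genre": "action"},
}


def score(title, model, data, signals):
    """Combine stored data and live signals using the model's weights."""
    item = data[title]
    genre_match = 1.0 if item["genre"] in signals["recent_genres"] else 0.0
    return model["w_popularity"] * item["popularity"] + model["w_recent_genre"] * genre_match


# Signals: fresh information from live services (hypothetical session values).
signals = {"recent_genres": {"action"}, "device": "tv", "time_of_day": "evening"}
ranked = sorted(DATA, key=lambda t: score(t, MODEL, DATA, signals), reverse=True)
print(ranked)  # ['Movie B', 'Movie A']
```

Here the member's recent interest in action titles (a signal) outweighs the higher stored popularity (data) because of how the model weights the two.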